Kubernetes

Overview

Kubernetes is an open-source platform for running and orchestrating containerized applications across multiple machines.

Set up a Kubernetes Cluster on Oracle Cloud using OKE

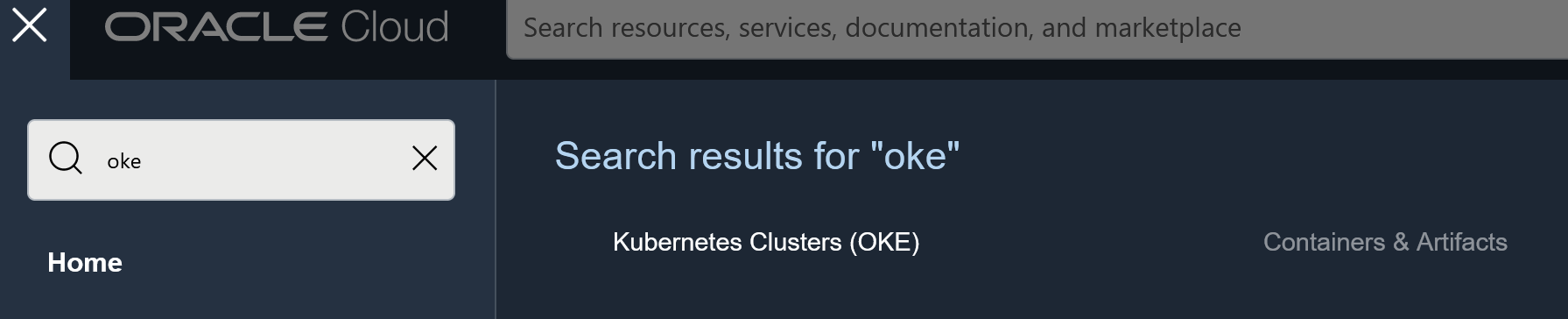

Search for OKE in the menu and then go to Kubernetes Clusters (OKE) under Containers & Artifacts:

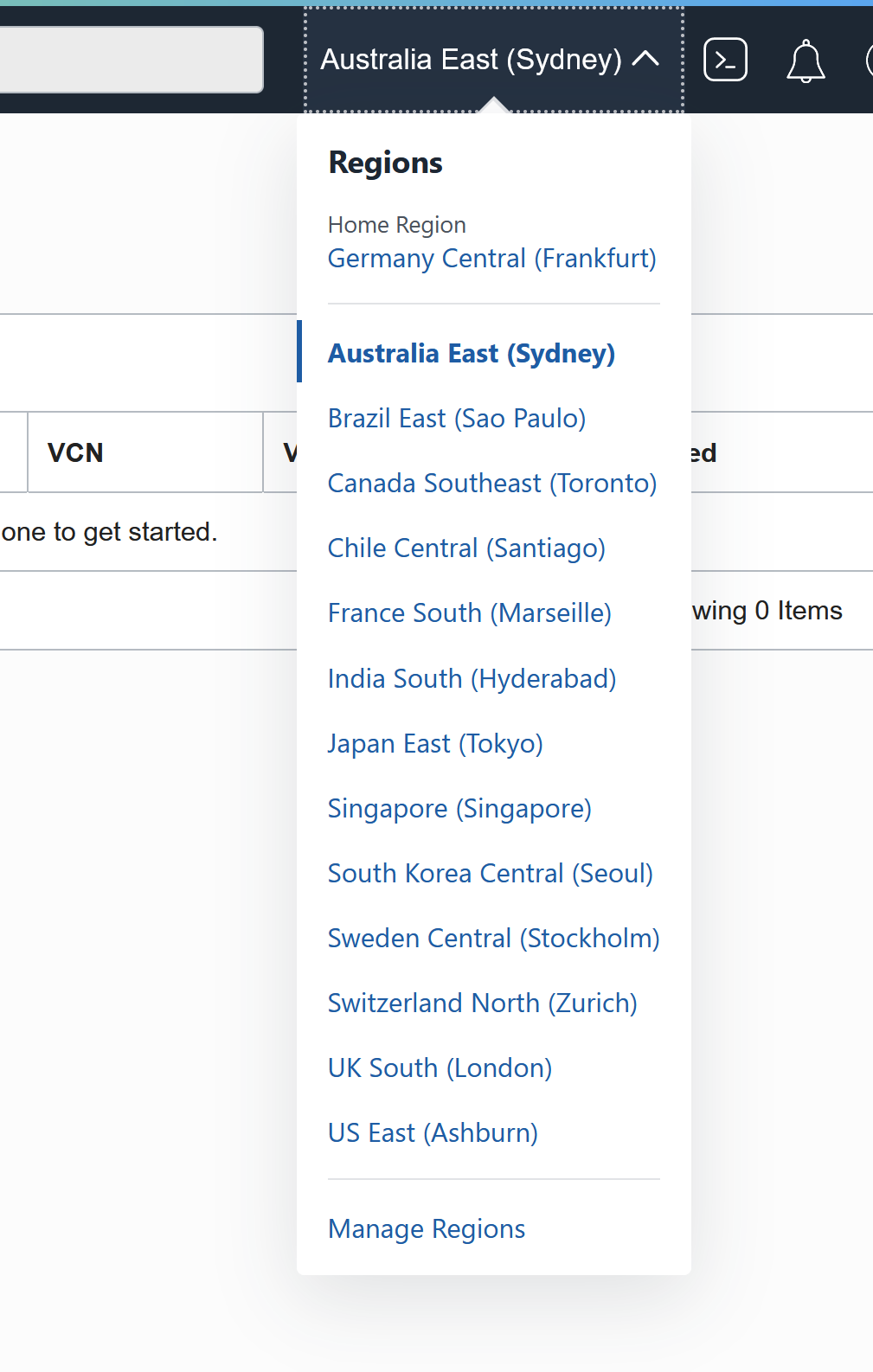

Select the region where you would like to create the Cluster:

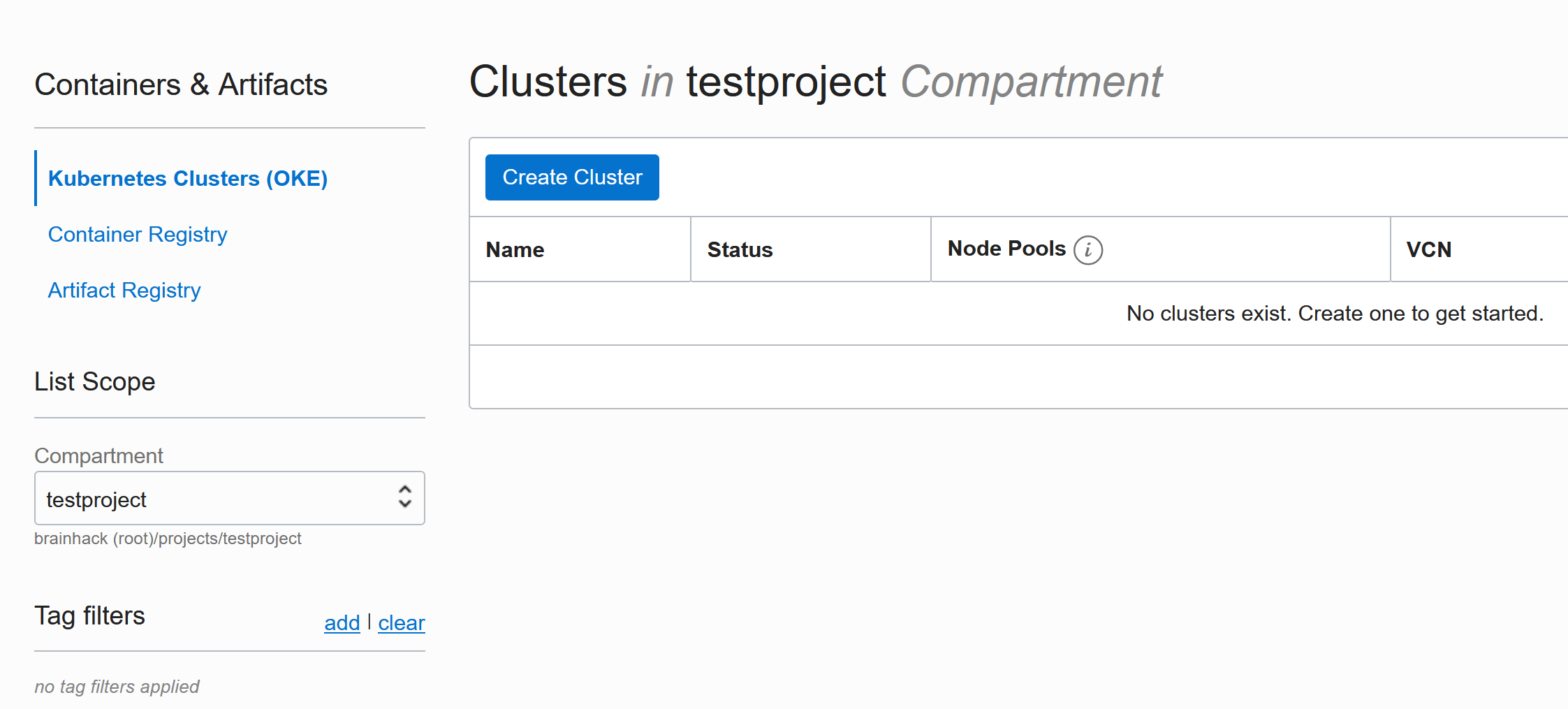

Select the compartment where you would like to create the Cluster:

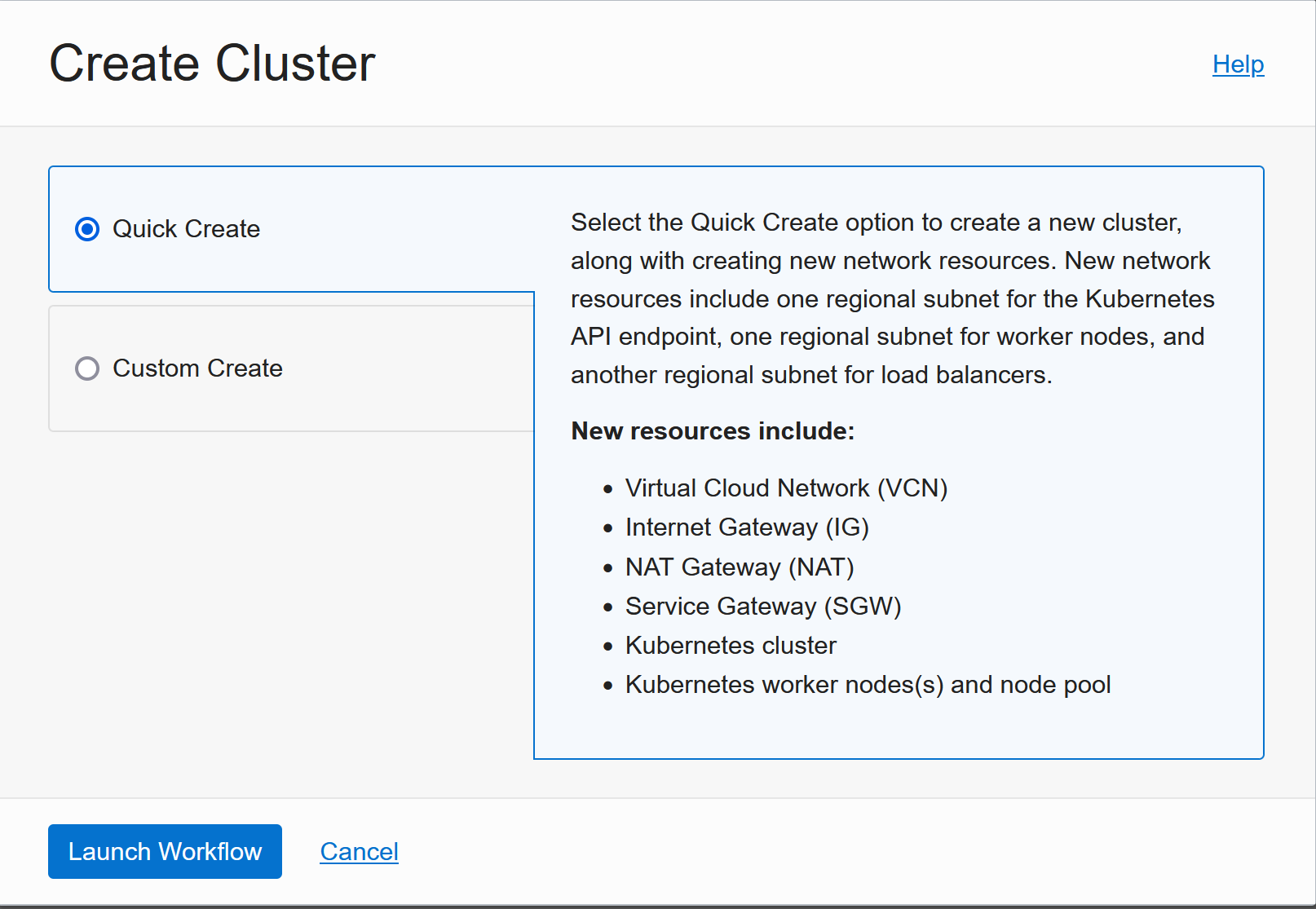

Quick Create is great and gives a good starting point that works for most applications:

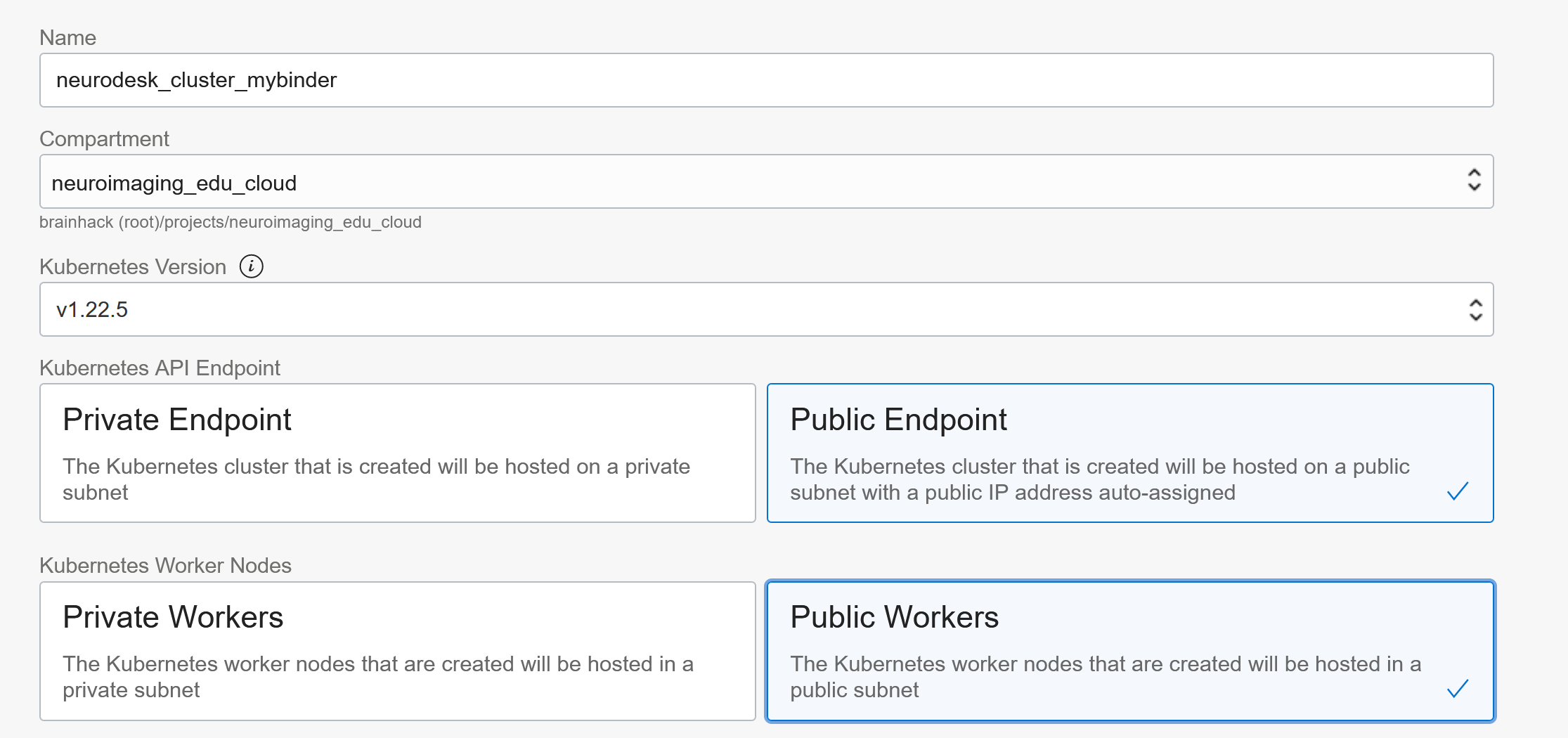

The defaults are sensible, but Public Workers make it easier to troubleshoot things in the beginning:

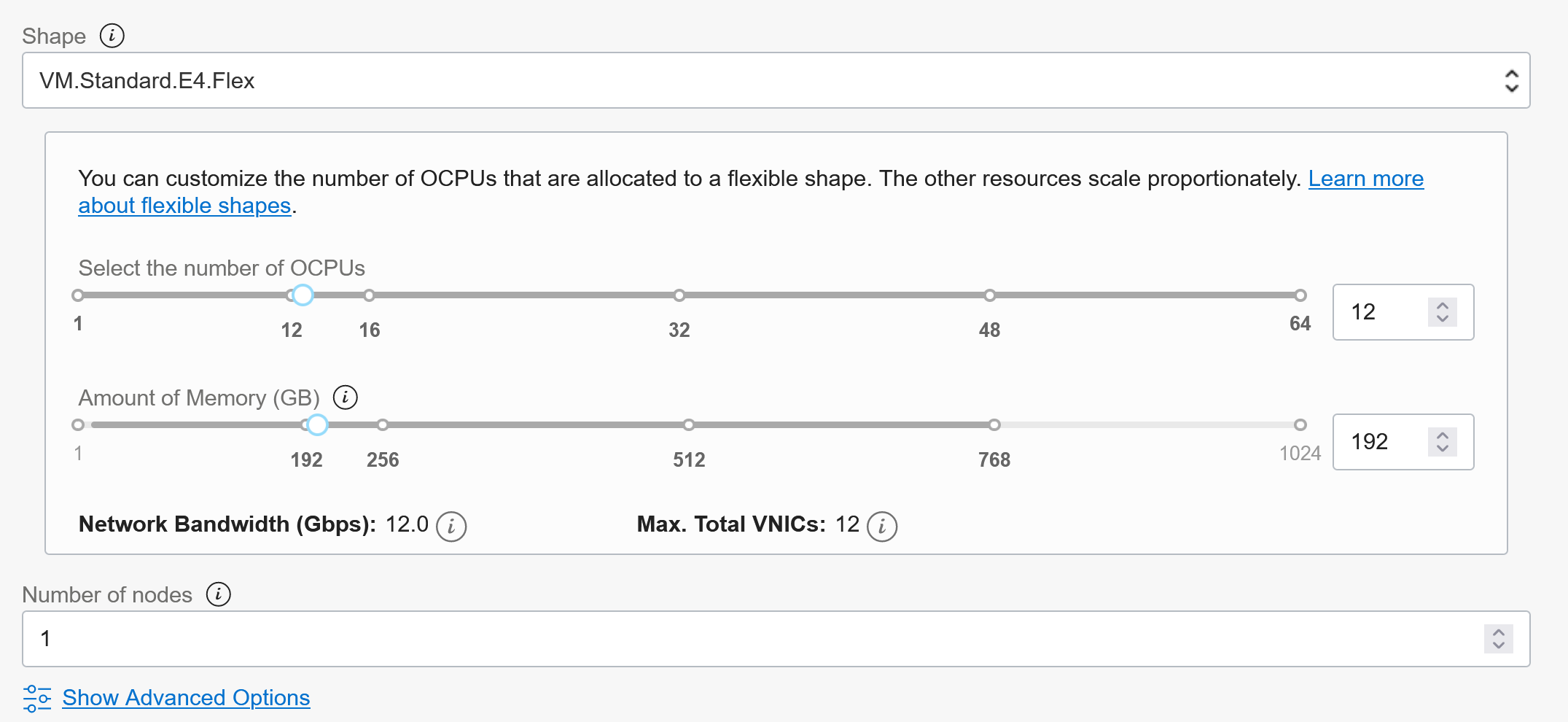

Select the shape you like. One worker node is a good start (the node count and shape can be changed later!):

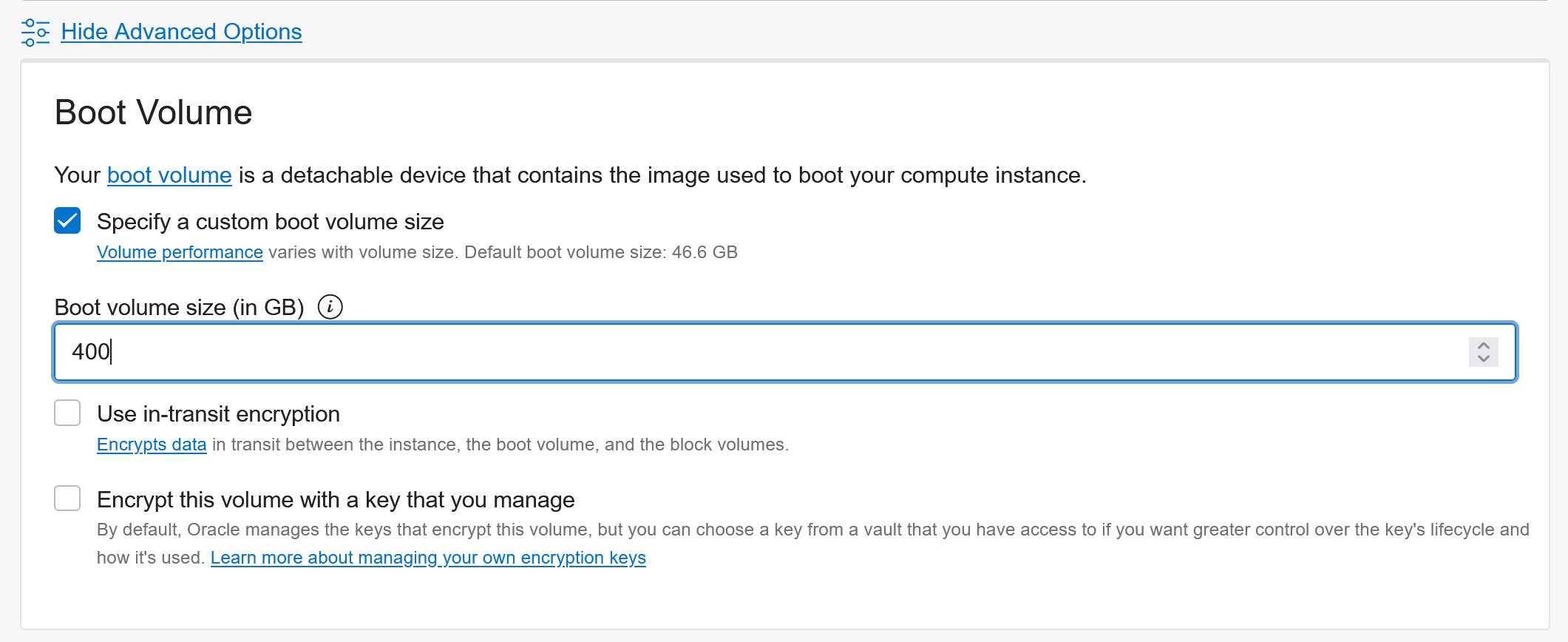

Under the advanced options, you can configure the boot volume size:

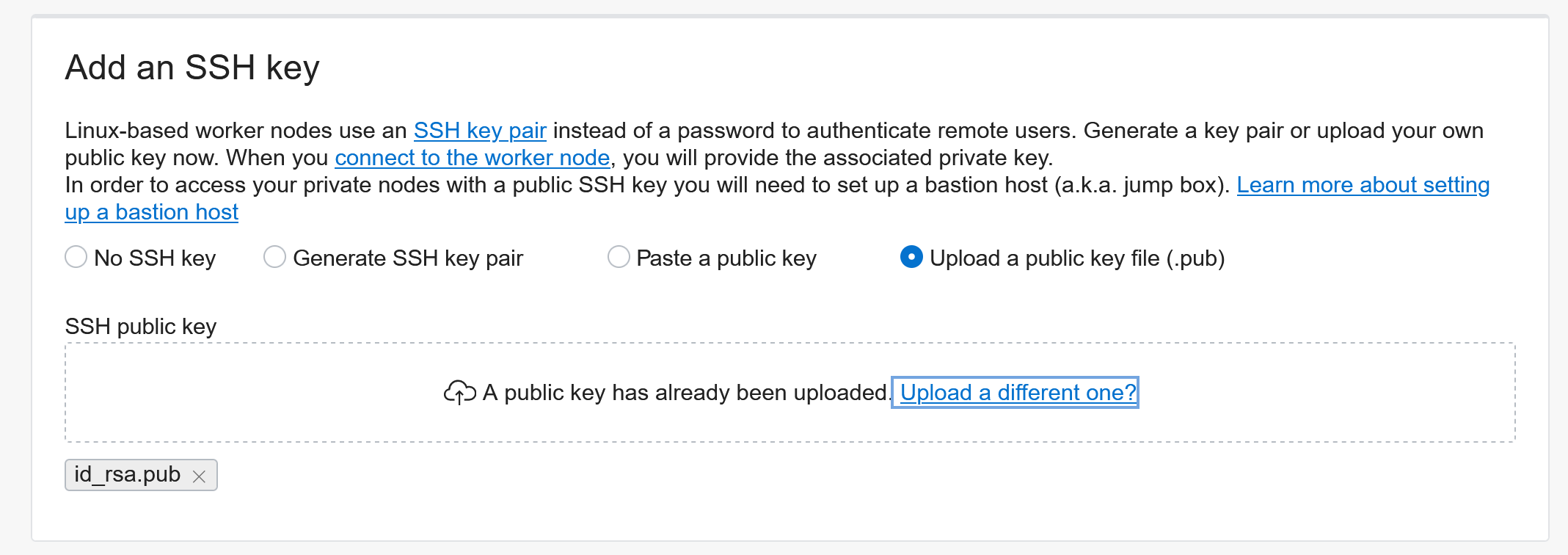

Add an SSH key for troubleshooting worker nodes:

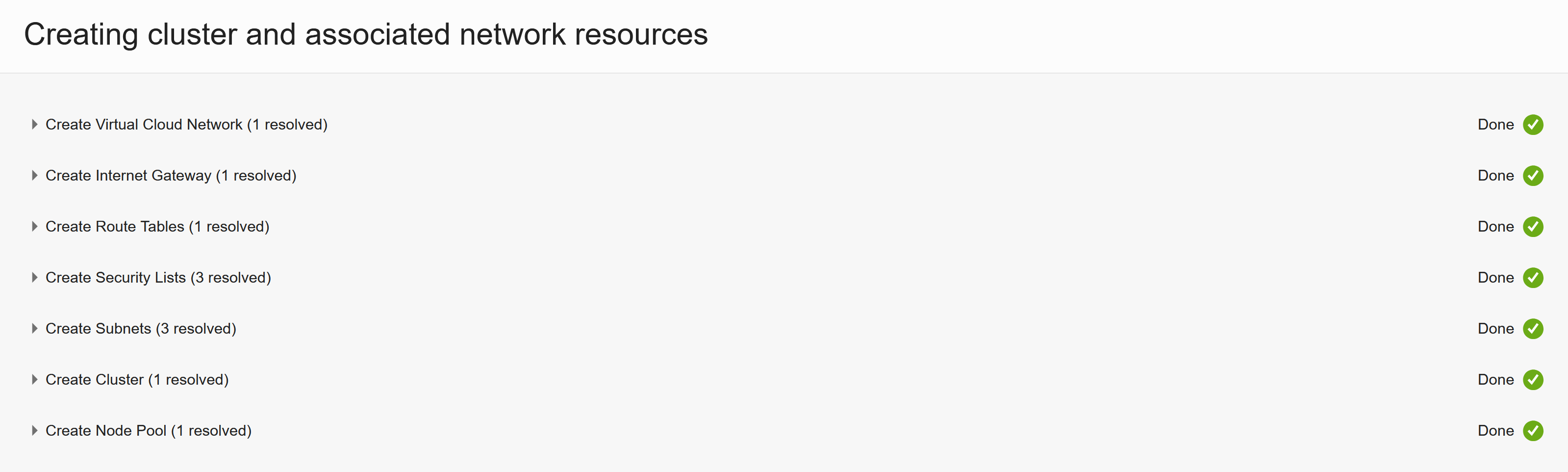
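If you do not have a key yet, a dedicated key pair for the worker nodes can be generated first. This is a minimal sketch: the function name `node_public_key` and the default path `~/.ssh/oke_nodes` are hypothetical choices, it assumes OpenSSH's `ssh-keygen` is installed, and it uses RSA because OCI instances accept RSA keys. It only creates a key if none exists at that path, then prints the public key to paste into the SSH key field.

```bash
#!/usr/bin/env bash
# Sketch: create (once) an SSH key pair for OKE worker nodes and print the public key.
# The default path ~/.ssh/oke_nodes is a hypothetical choice.

node_public_key() {
  local key="${1:-$HOME/.ssh/oke_nodes}"
  if [ ! -f "$key" ]; then
    mkdir -p -m 700 "$(dirname "$key")"
    # ssh-keygen prompts for a passphrase; leaving it empty is convenient but less secure.
    ssh-keygen -t rsa -b 4096 -C "oke-worker-nodes" -f "$key"
  fi
  # This is the text to paste into the SSH key field of the cluster wizard.
  cat "${key}.pub"
}

# Usage: node_public_key
```

Keep the private key (`~/.ssh/oke_nodes` in this sketch) safe, since it is what you will use to connect to the nodes.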

Review everything, then hit Create, and OKE will set up everything for you :)

That’s how easy it can be to set up a whole Kubernetes cluster :) Thanks OCI team for creating OKE!

It will take a few minutes until everything is up and running -> Coffee break?

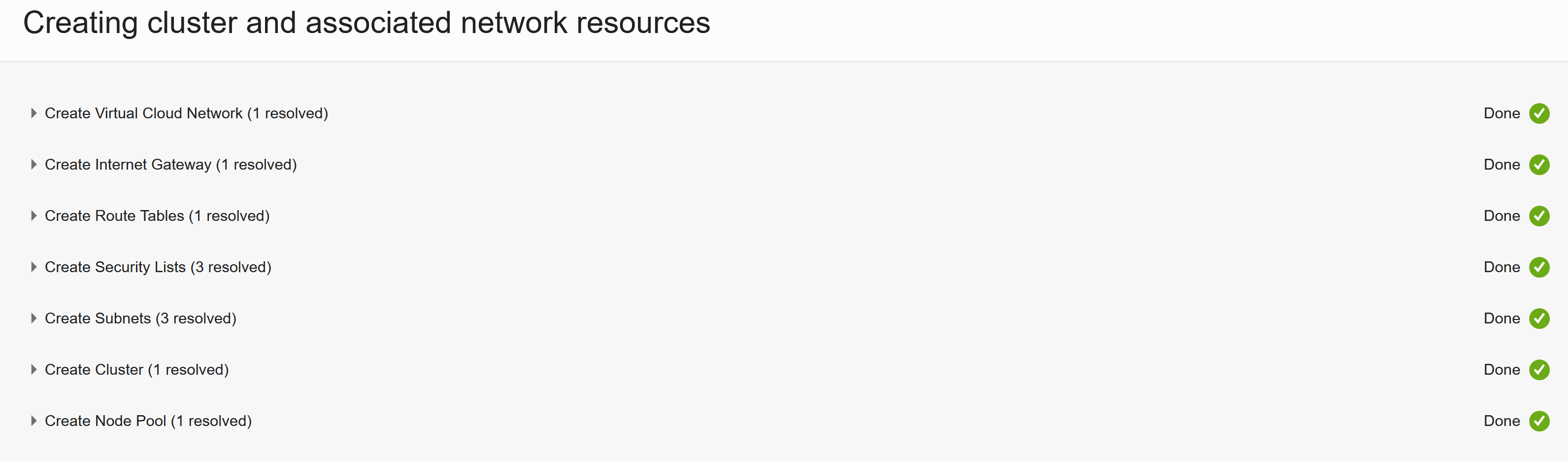

Once everything is ready:

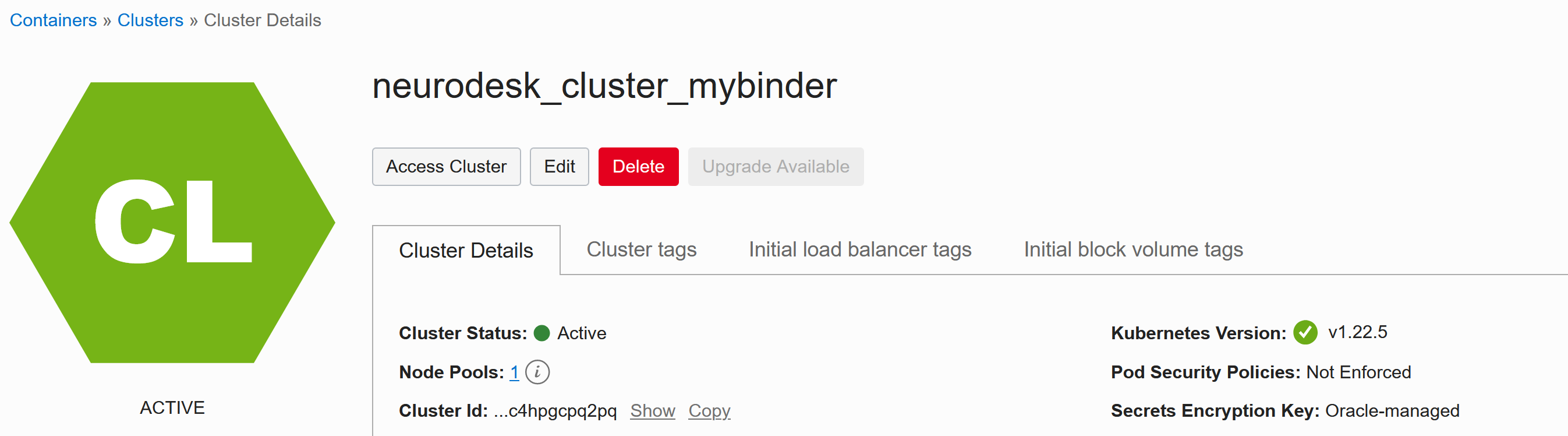

You can then access the cluster; the required commands are shown when you click Access Cluster:
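The Access Cluster dialog shows the exact commands for your cluster. A minimal sketch of the same steps, assuming the OCI CLI is installed and configured (`~/.oci/config`) and `kubectl` is on the PATH: the helper names are hypothetical, and the cluster OCID and region in the usage lines are placeholders for the values from the dialog.

```bash
#!/usr/bin/env bash
# Sketch: write a kubeconfig for an OKE cluster and count the Ready worker nodes.
# Assumes a configured OCI CLI and an installed kubectl.

# Write the kubeconfig for the cluster and point kubectl at it.
setup_kubeconfig() {
  local cluster_id="$1" region="$2"
  local kubeconfig="${3:-$HOME/.kube/config}"
  mkdir -p "$(dirname "$kubeconfig")"
  oci ce cluster create-kubeconfig \
    --cluster-id "$cluster_id" \
    --file "$kubeconfig" \
    --region "$region" \
    --token-version 2.0.0 \
    --kube-endpoint PUBLIC_ENDPOINT || return 1
  export KUBECONFIG="$kubeconfig"
}

# Count nodes whose STATUS column is exactly "Ready"
# (cordoned nodes show "Ready,SchedulingDisabled" and are not counted).
count_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage (placeholders, copy the real values from the Access Cluster dialog):
#   setup_kubeconfig "ocid1.cluster.oc1..<your-cluster-ocid>" "<your-region>"
#   count_ready_nodes
```

With a single worker node, `count_ready_nodes` should print 1 once the node has joined the cluster.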

Customizing nodes using Init-scripts

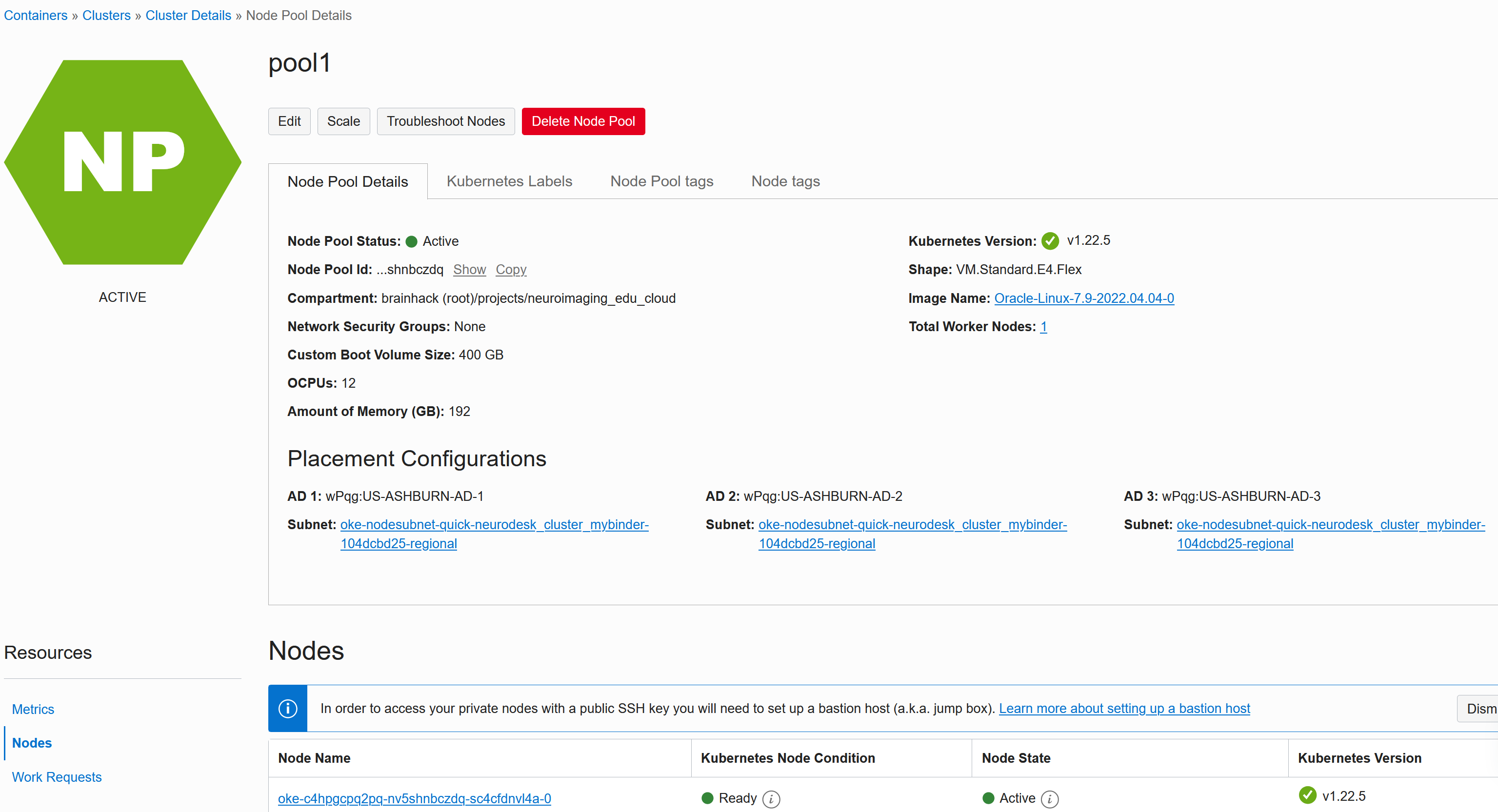

If you configured public IP addresses for the worker nodes, you can connect to the nodes for troubleshooting. Click on the node under Nodes -> pool1:

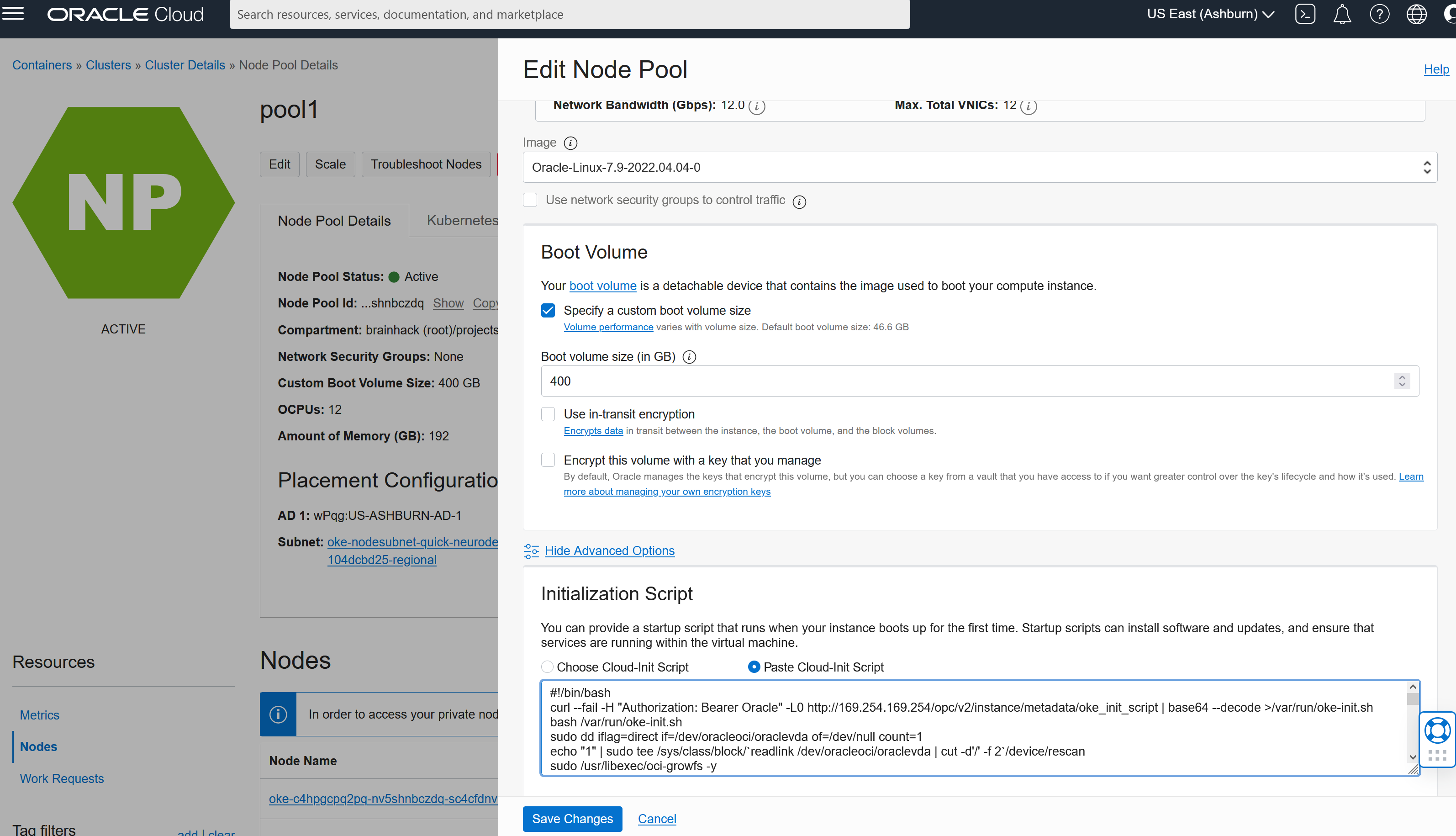
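From a terminal, connecting could look like the following sketch. It assumes the node runs an Oracle Linux image (default user `opc`), that the node reports its public address as an `ExternalIP` in Kubernetes (otherwise copy the public IP from the node details page), and that the hypothetical variable `NODE_KEY` points to the private key matching the public key added when creating the cluster. `ssh_node` and `node_external_ip` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Sketch: SSH into an OKE worker node by its Kubernetes node name.
# Assumes Oracle Linux nodes (user "opc") that report an ExternalIP address.

# Print the node's external (public) IP as reported by Kubernetes, if any.
node_external_ip() {
  kubectl get node "$1" \
    -o jsonpath='{.status.addresses[?(@.type=="ExternalIP")].address}'
}

# Connect using the private key in NODE_KEY (hypothetical; defaults to ~/.ssh/id_rsa).
ssh_node() {
  local node_name="$1" ip
  ip="$(node_external_ip "$node_name")"
  if [ -z "$ip" ]; then
    echo "no ExternalIP reported for $node_name; use the public IP from the console" >&2
    return 1
  fi
  ssh -i "${NODE_KEY:-$HOME/.ssh/id_rsa}" "opc@${ip}"
}

# Usage: ssh_node 10.0.10.5   # node name as shown by `kubectl get nodes`
```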

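By default, the root file system is NOT expanded to the boot volume size you configured, but this can be fixed with an init script. Edit the node pool and, under the advanced options, set the init script:

This script first runs the default OKE init script (which joins the node to the cluster) and then expands the disk: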

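```bash
#!/bin/bash

# Download and run the default OKE init script so the node still joins the cluster.
curl --fail -H "Authorization: Bearer Oracle" -L0 http://169.254.169.254/opc/v2/instance/metadata/oke_init_script | base64 --decode >/var/run/oke-init.sh
bash /var/run/oke-init.sh

# Do a direct (uncached) read from the boot volume before triggering the rescan.
sudo dd iflag=direct if=/dev/oracleoci/oraclevda of=/dev/null count=1

# Ask the kernel to rescan the underlying block device (e.g. sda) so it sees the full volume size.
echo "1" | sudo tee "/sys/class/block/$(readlink /dev/oracleoci/oraclevda | cut -d'/' -f 2)/device/rescan"

# Grow the root partition and file system into the new space without prompting.
sudo /usr/libexec/oci-growfs -y
```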

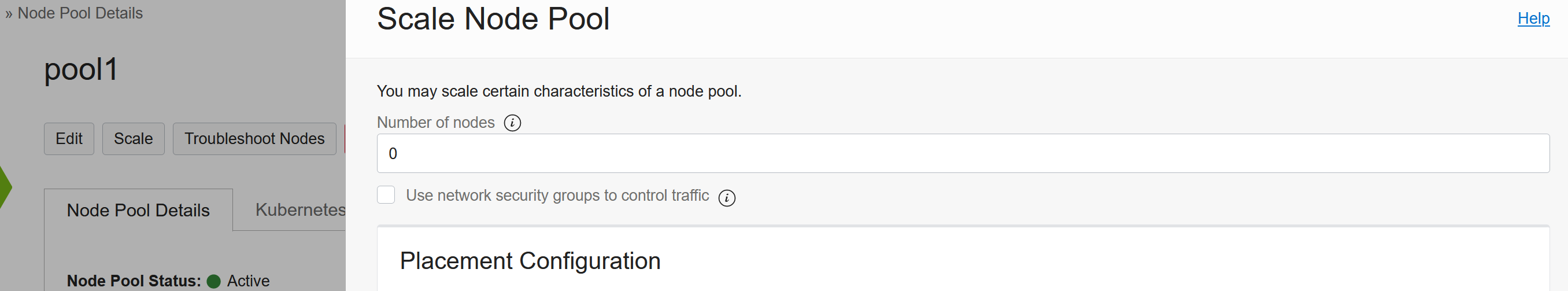

Then hit Save Changes. The init script only runs when a node is created, so to apply these changes you need to scale the pool to 0 and then back up to 1:
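Scaling can also be done from the command line, and once the new node is up you can check on it whether the boot disk was actually grown. This is a sketch: the helper names are hypothetical, the `--size` and `--force` flags are my assumption about `oci ce node-pool update` (confirm with `oci ce node-pool update --help`), and `unallocated_gib` assumes `lsblk` from util-linux, which Oracle Linux ships.

```bash
#!/usr/bin/env bash
# Sketch: scale an OKE node pool and verify the boot disk growth on the new node.
# The --size/--force flags are an assumption; check `oci ce node-pool update --help`.

# Set the total number of nodes in the pool (0 removes the old nodes, 1 creates a fresh one).
scale_node_pool() {
  oci ce node-pool update --node-pool-id "$1" --size "$2" --force
}

# Run ON the new worker node: print how many whole GiB of the boot disk are not
# covered by any partition. Close to 0 means the partition was grown.
# Defaults to the disk behind /dev/oracleoci/oraclevda, as used by the init script.
unallocated_gib() {
  local disk="${1:-$(readlink -f /dev/oracleoci/oraclevda)}"
  lsblk -bn -o SIZE,TYPE "$disk" |
    awk '$2 == "disk" { d = $1 } $2 == "part" { p += $1 } END { printf "%d\n", (d - p) / 1024 ^ 3 }'
}

# Usage (the node pool OCID is a placeholder):
#   scale_node_pool "ocid1.nodepool.oc1..<your-node-pool-ocid>" 0
#   # wait until the old node is gone, then:
#   scale_node_pool "ocid1.nodepool.oc1..<your-node-pool-ocid>" 1
#   # on the new node, after connecting via SSH:
#   unallocated_gib
```

Only partitions are summed, so LVM volumes inside a partition do not distort the result.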

Cleanup

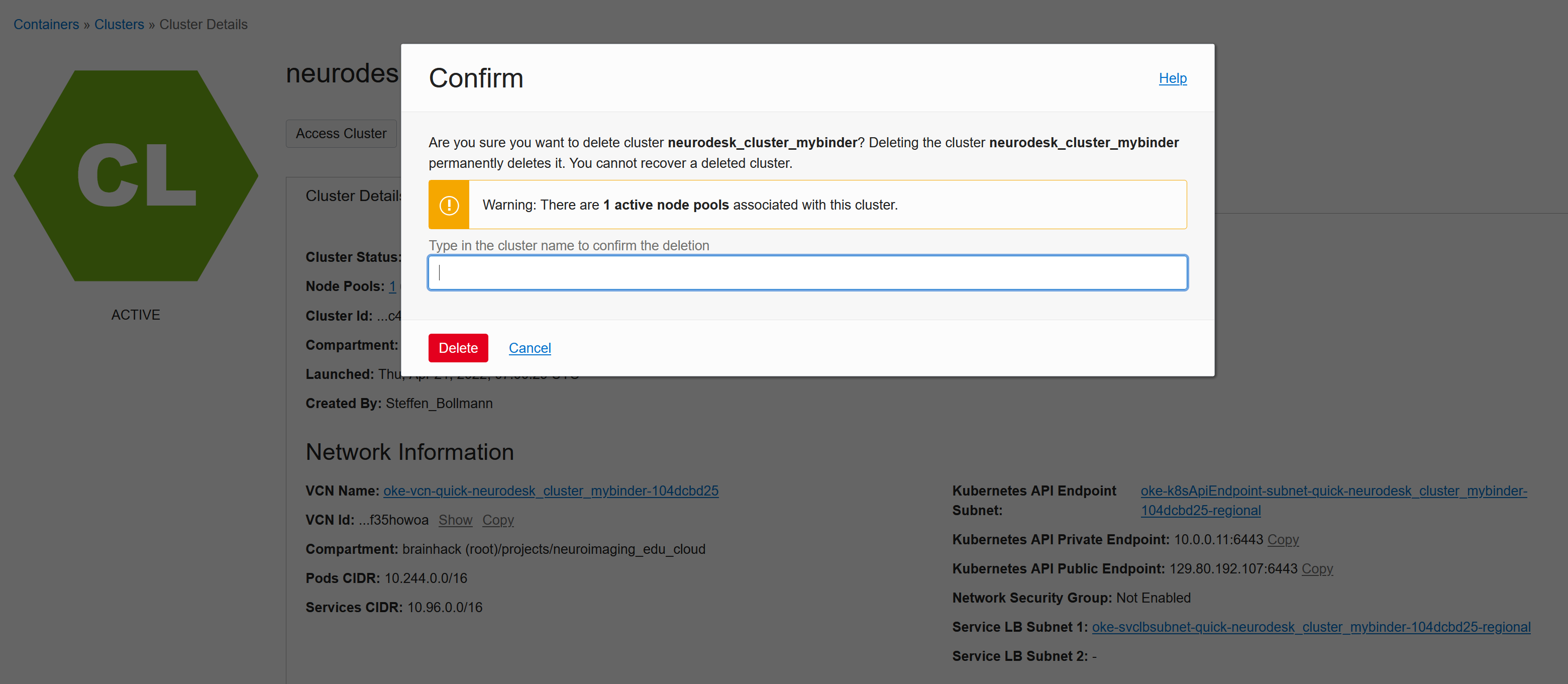

To clean up, you can delete the whole cluster:

Be aware that Kubernetes can create cloud resources via API calls. That is great, but it also means that these additionally created resources (like load balancers or storage volumes) will NOT be cleaned up automatically when the cluster is deleted and need to be cleaned up manually!
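One way to reduce the manual work is to remove those resources through Kubernetes before deleting the cluster. A sketch, assuming `kubectl` still points at the cluster: Services of type `LoadBalancer` own OCI load balancers, so deleting the Service lets the cloud controller remove the load balancer; PersistentVolumeClaims are usually backed by block volumes, and deleting one whose StorageClass uses `reclaimPolicy: Delete` also deletes the volume and its data, so they are only listed here for review. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: clean up Kubernetes-created OCI resources before deleting an OKE cluster.

# Print namespace/name for every Service of type LoadBalancer.
list_lb_services() {
  kubectl get services --all-namespaces \
    -o jsonpath='{range .items[?(@.spec.type=="LoadBalancer")]}{.metadata.namespace}/{.metadata.name}{"\n"}{end}'
}

# Delete those Services one by one so their load balancers are removed.
delete_lb_services() {
  list_lb_services | while IFS=/ read -r ns name; do
    if [ -n "$name" ]; then
      kubectl delete service "$name" --namespace "$ns"
    fi
  done
}

# List PersistentVolumeClaims (namespace/name) for review; deleting them may destroy data.
list_pvcs() {
  kubectl get pvc --all-namespaces \
    -o jsonpath='{range .items[*]}{.metadata.namespace}/{.metadata.name}{"\n"}{end}'
}

# Usage:
#   delete_lb_services
#   list_pvcs
#   oci ce cluster delete --cluster-id "ocid1.cluster.oc1..<your-cluster-ocid>"
```

Even after this, it is worth checking the compartment in the console for leftover load balancers and block volumes.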