Object Storage

Overview

Object Storage works a bit like Dropbox: you simply store files and make them accessible via links on the web (or via APIs). Unlike block storage, you don’t have to monitor the size of a disk. It also comes with useful features such as mirroring your data to other regions and tiering (storing files more cheaply if they are not accessed very often).

Set up a new Bucket

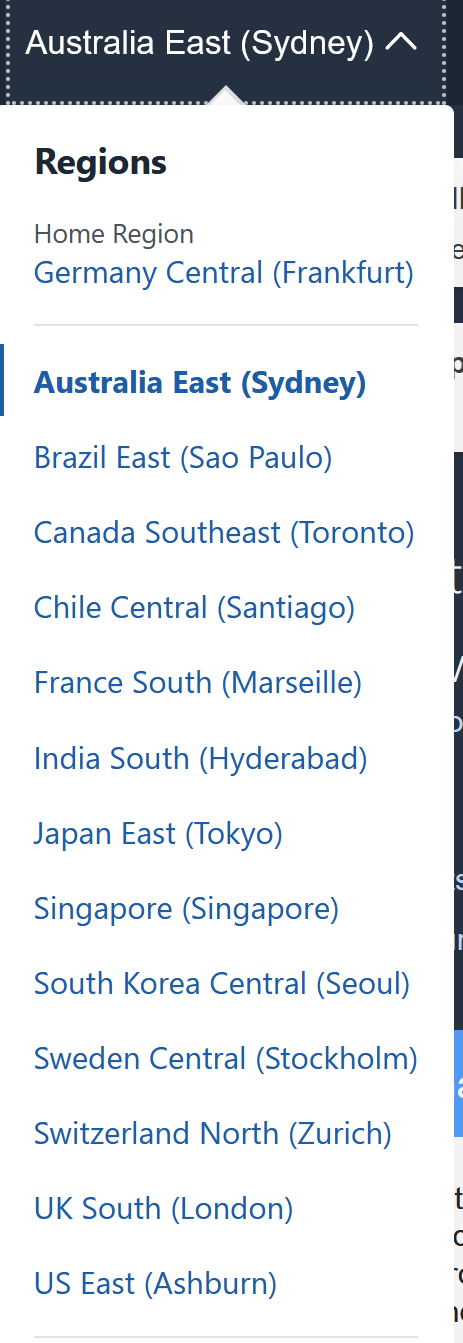

Select a Region from the list where you want your files to be located:

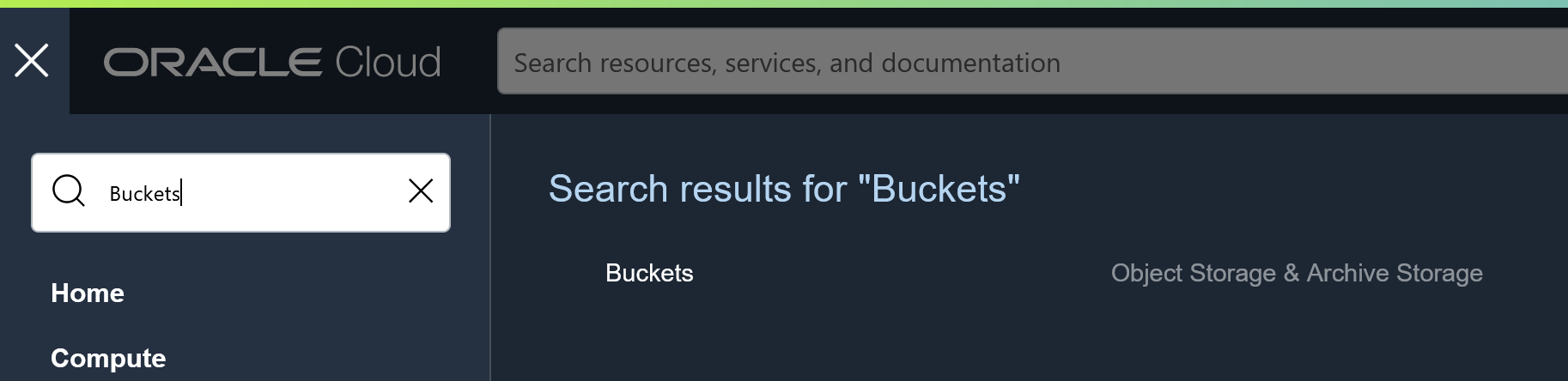

Then search for Buckets and you will find it under Storage -> Object Storage & Archival Storage -> Buckets:

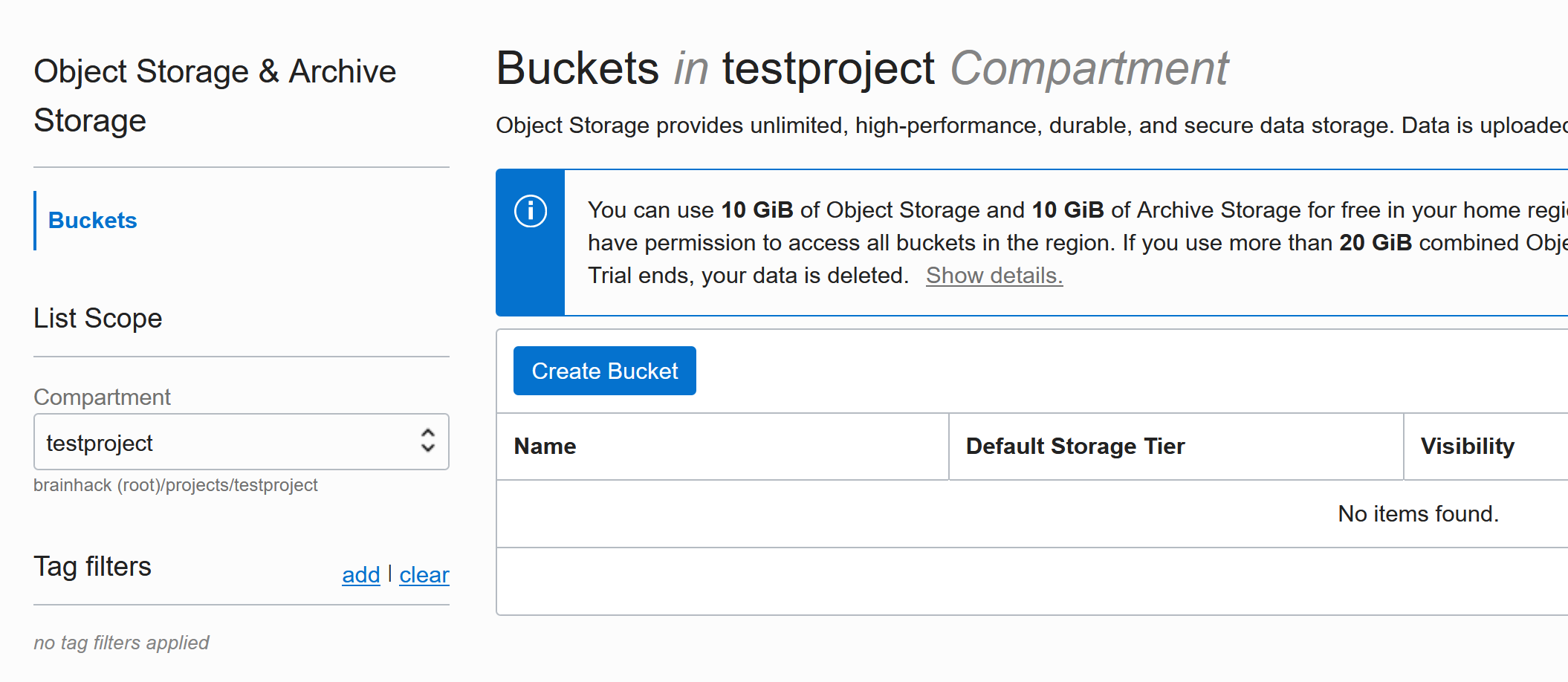

Select your project’s compartment:

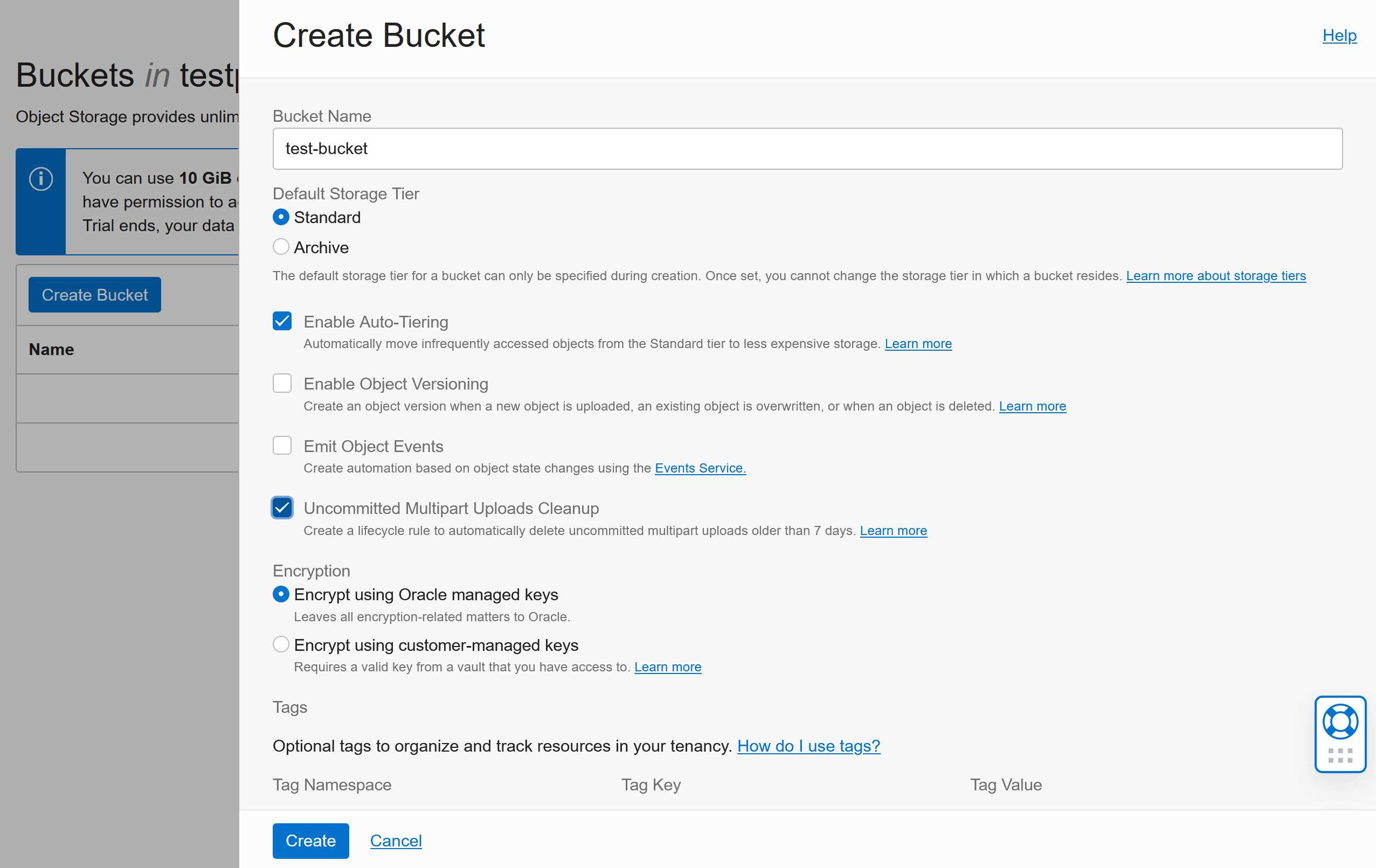

Create a new Bucket:

- Give it a name.

- In addition to the defaults, we recommend enabling Auto-Tiering (this makes the storage cheaper by moving objects to a lower storage tier if they are not used frequently) and Uncommitted Multipart Uploads Cleanup (this cleans up after uploads that failed halfway).

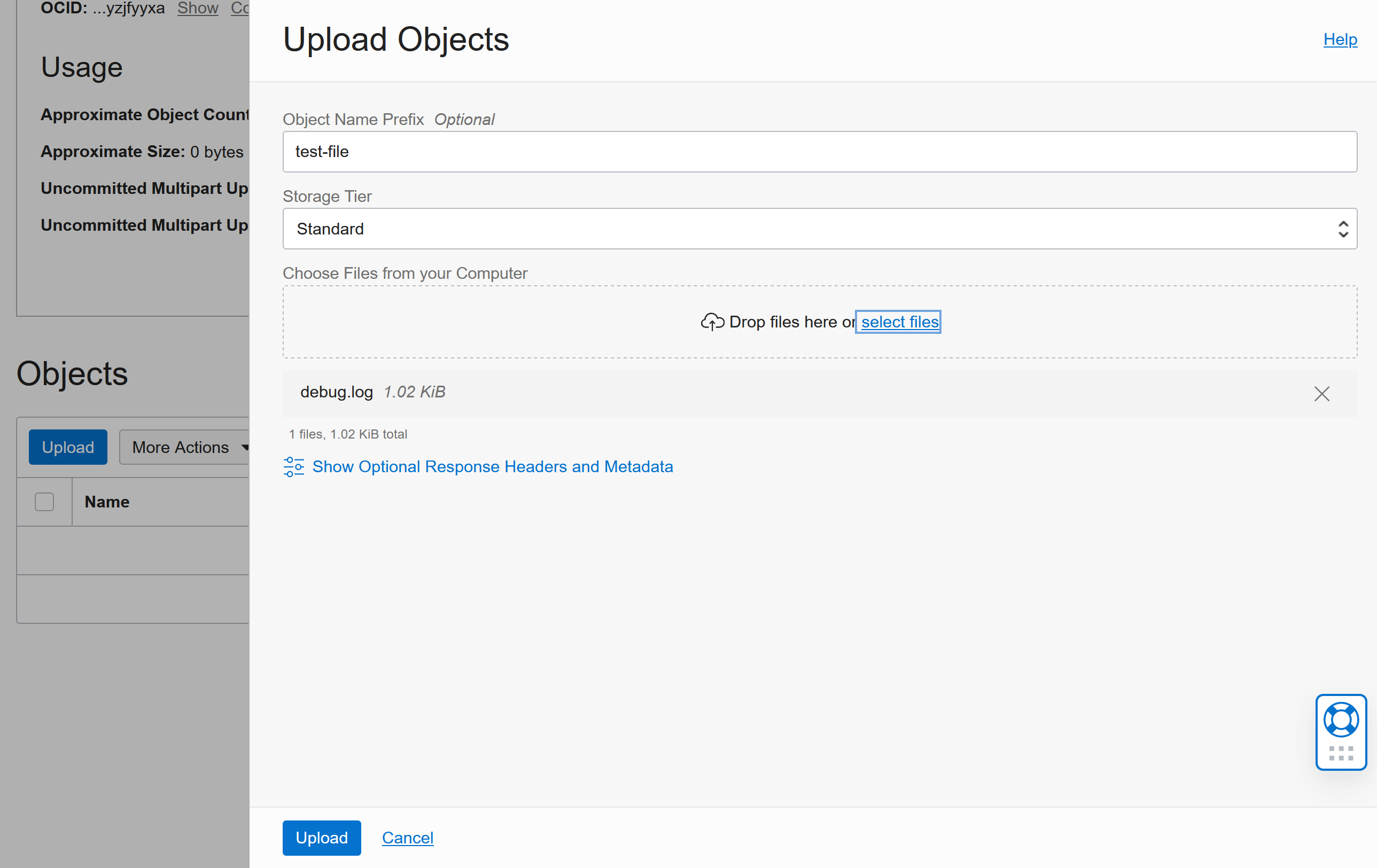
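The same bucket can also be created from the command line. The following is a minimal sketch, assuming the OCI CLI is installed and configured for your tenancy; `create_bucket` is a hypothetical helper name, and the compartment OCID and bucket name are placeholders. It only enables Auto-Tiering; the Uncommitted Multipart Uploads Cleanup rule is not covered by this sketch.

```shell
# Sketch: create a bucket with Auto-Tiering enabled via the OCI CLI.
# Assumes `oci` is installed and configured; create_bucket is a
# hypothetical helper, not part of the CLI itself.
create_bucket() {
  # $1 = compartment OCID, $2 = bucket name
  oci os bucket create \
    --compartment-id "$1" \
    --name "$2" \
    --auto-tiering InfrequentAccess
}

# Usage (placeholder values):
# create_bucket "YOUR_COMPARTMENT_OCID" "my-bucket"
```

The bucket is created in the region your CLI profile points to, so check the profile before running it.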

Uploading files to a bucket

You can upload files via the GUI in the Oracle Cloud console by clicking the Upload button:

You can also use tools like rclone, curl or the OCI CLI to upload files (more about these tools later).
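For the OCI CLI, an upload could look roughly like the sketch below. It assumes a configured OCI CLI; `upload_object` is a hypothetical helper name, and the object is stored under the file's base name, which is a choice made for this example.

```shell
# Sketch: upload a single local file to a bucket with the OCI CLI.
# upload_object is a hypothetical helper; the object name is taken
# from the file's base name (an assumption of this example).
upload_object() {
  # $1 = bucket name, $2 = path to the local file
  oci os object put \
    --bucket-name "$1" \
    --file "$2" \
    --name "$(basename "$2")"
}

# Usage (placeholder values):
# upload_object "my-bucket" "./report.pdf"
```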

Making a Bucket public

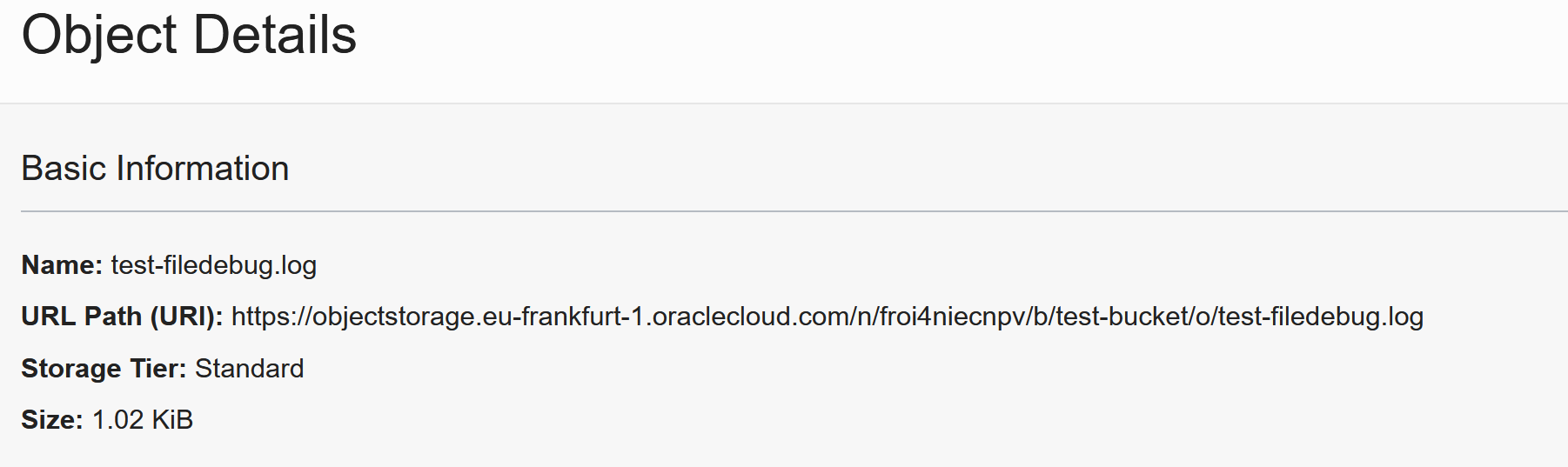

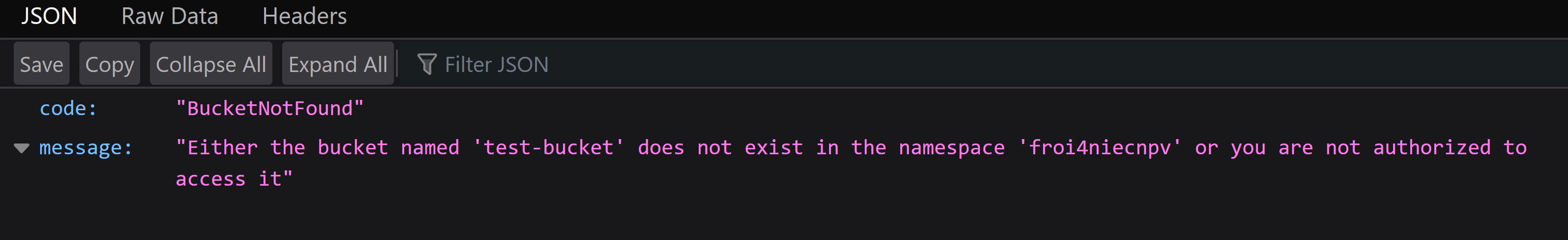

By default, the files in the bucket are not visible to everyone. Let’s find the URL of the file we just uploaded: click on the three dots next to the file and then on View Object Details:

When opening this URL, you will get this error:

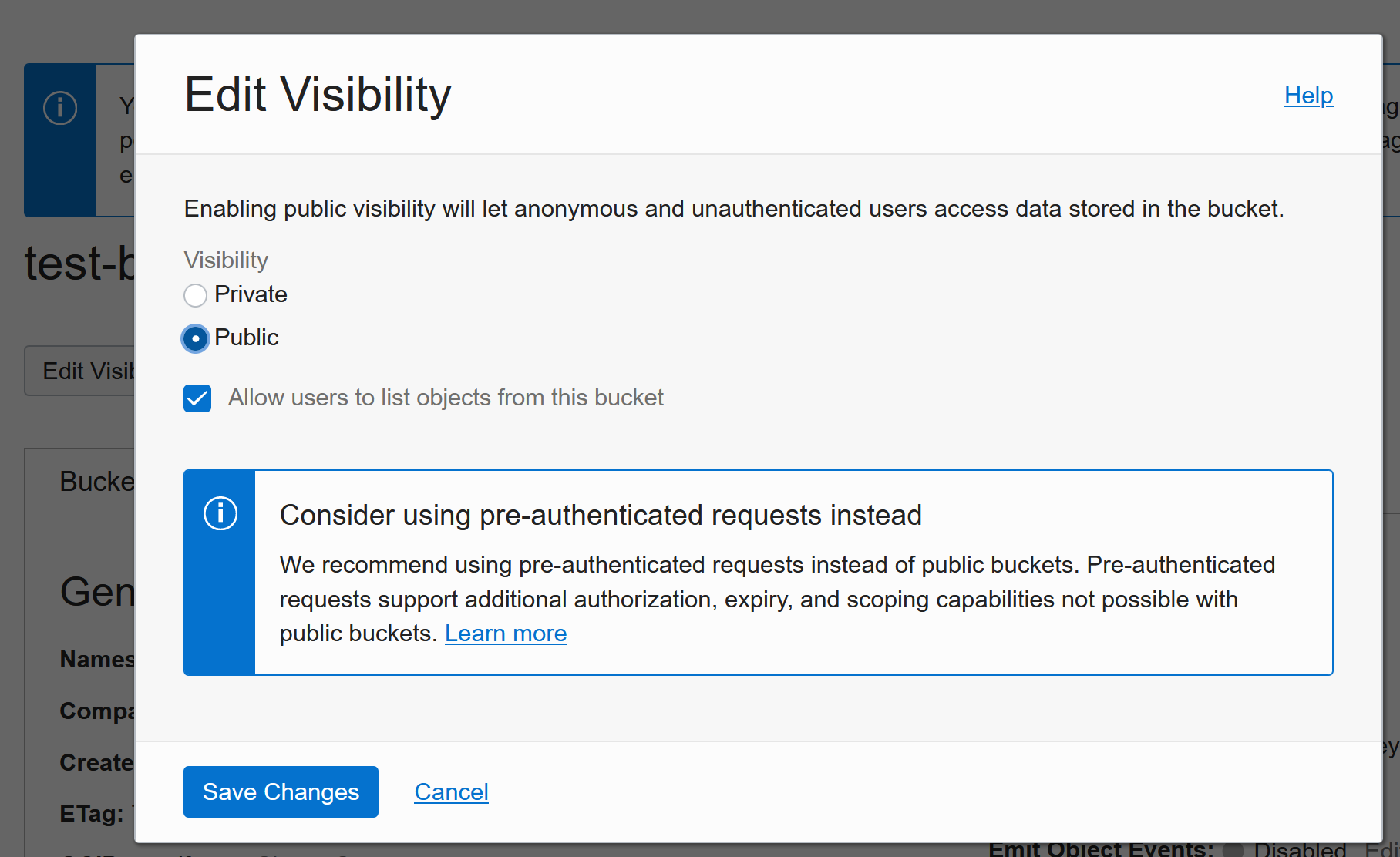

You can either make the WHOLE bucket visible to the world or use “Pre-Authenticated Requests”. Let’s start with the easy (but less controlled and less secure) way first:

Click on Edit Visibility and switch to public:

Now the file and EVERYTHING else in the bucket are visible to EVERYONE on the internet.

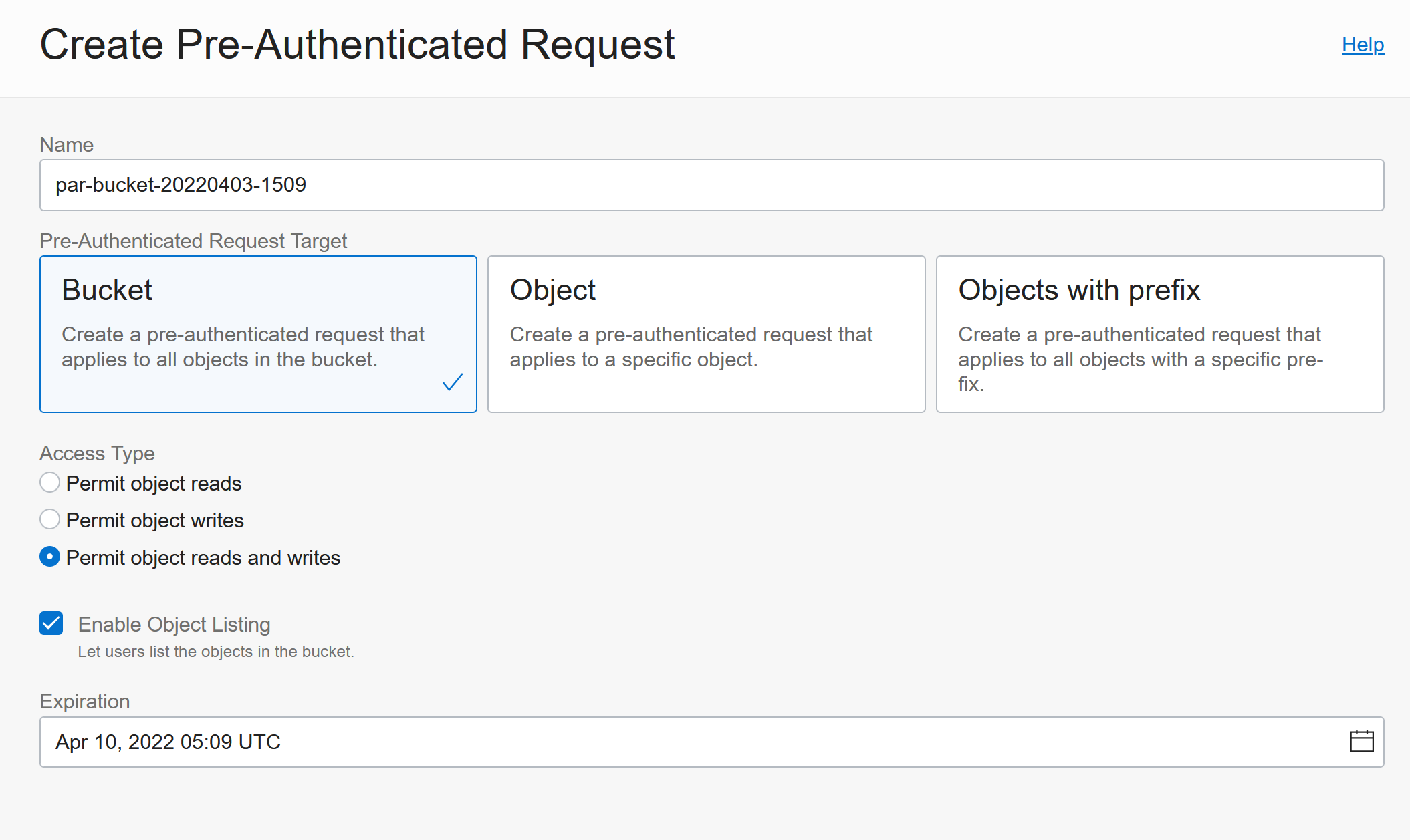
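To check from a terminal whether an object is publicly readable, you can request it without any credentials. The sketch below builds the object's URL from its parts and prints the HTTP status code; `object_url` and `http_status` are hypothetical helper names, and the region, namespace, bucket and object values are placeholders you need to fill in.

```shell
# Sketch: build an object's URL and check whether it is readable
# without authentication. Helper names are hypothetical.
object_url() {
  # $1 = region, $2 = namespace, $3 = bucket, $4 = object name
  # Object names with special characters would need URL-encoding first.
  printf 'https://objectstorage.%s.oraclecloud.com/n/%s/b/%s/o/%s\n' \
    "$1" "$2" "$3" "$4"
}

http_status() {
  # Send a HEAD request and print only the HTTP status code.
  curl --silent --head --output /dev/null --write-out '%{http_code}\n' "$1"
}

# Usage (placeholder values):
# http_status "$(object_url eu-frankfurt-1 YOUR_NAMESPACE YOUR_BUCKET hello.txt)"
```

A status of 200 means the object can be read by anyone; any other status means it is not publicly readable.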

Pre-Authenticated Requests

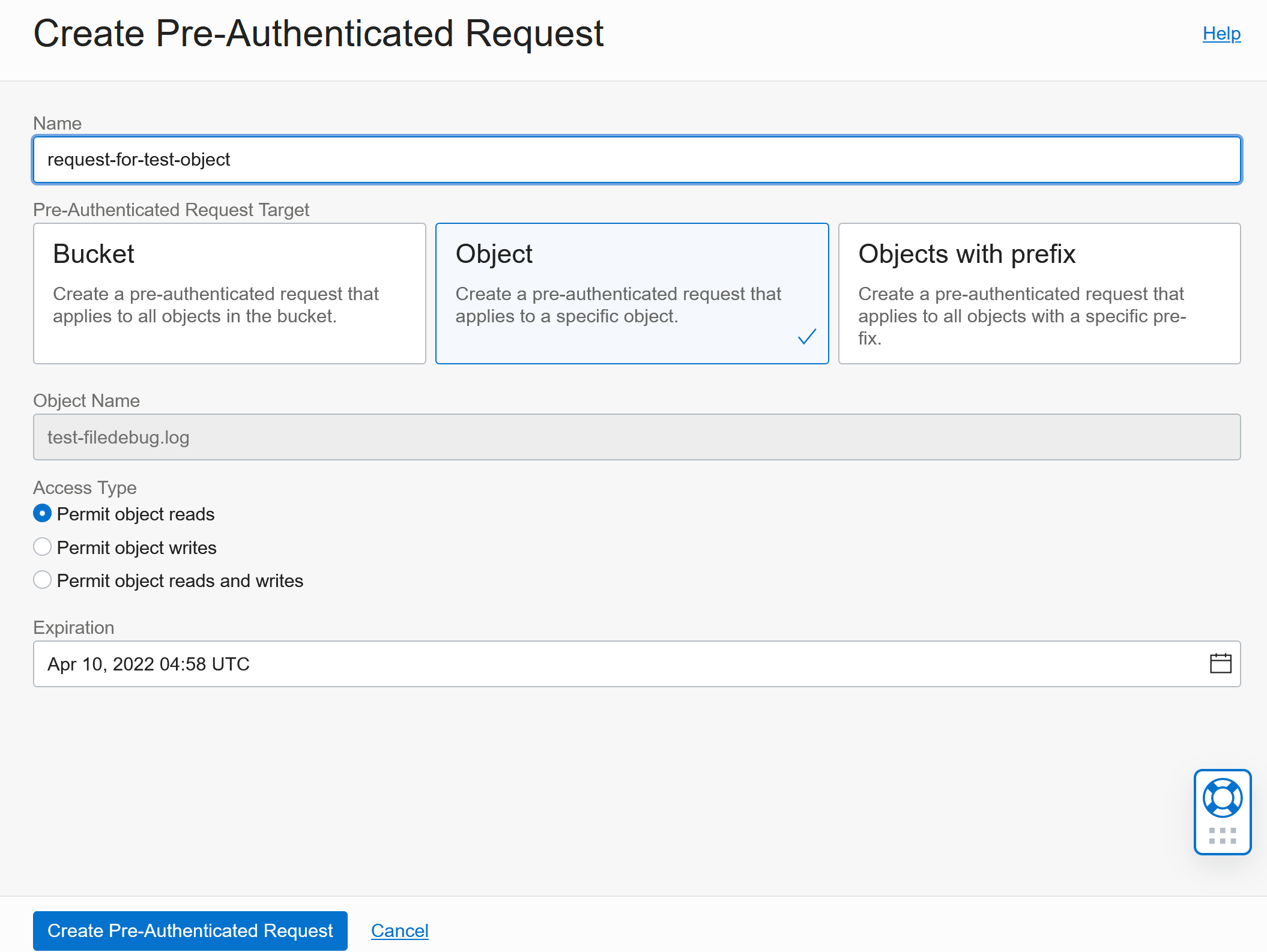

Click on the three dots next to the file again and click Create Pre-Authenticated Request:

This gives you more options to control access and you can also expire the access :)

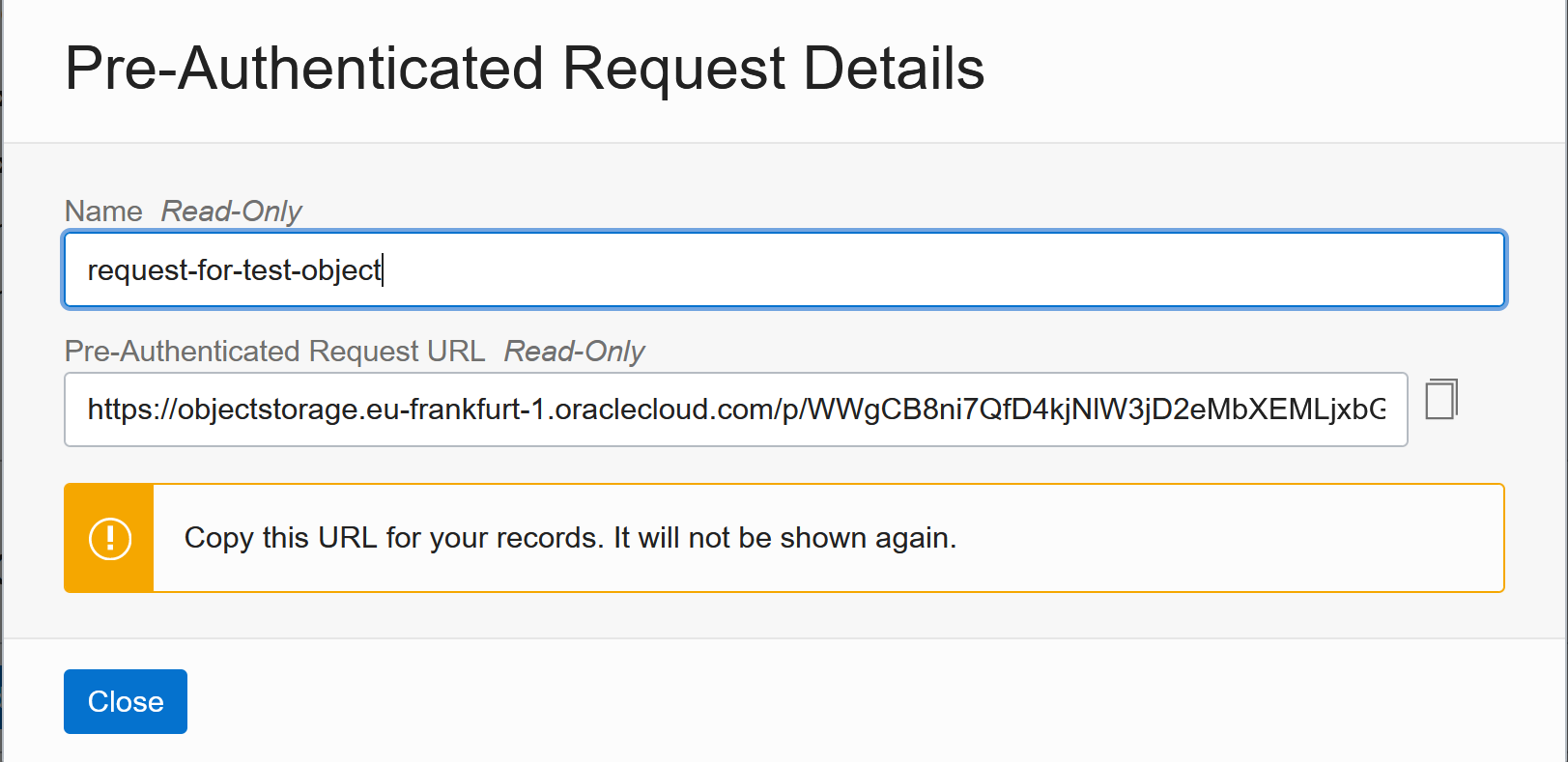

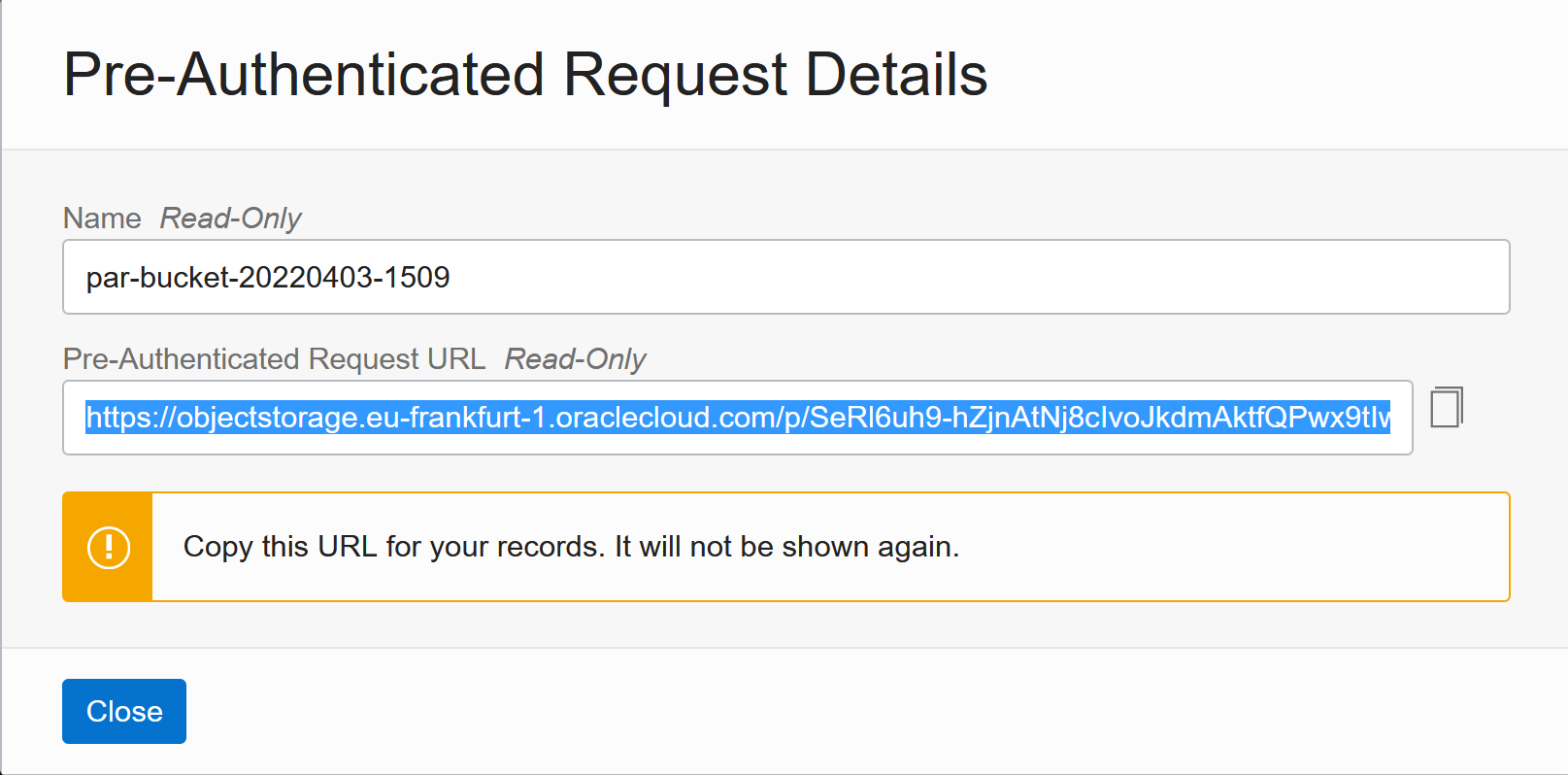

And you then get a specific URL to access the file (or the bucket or the files you configured):

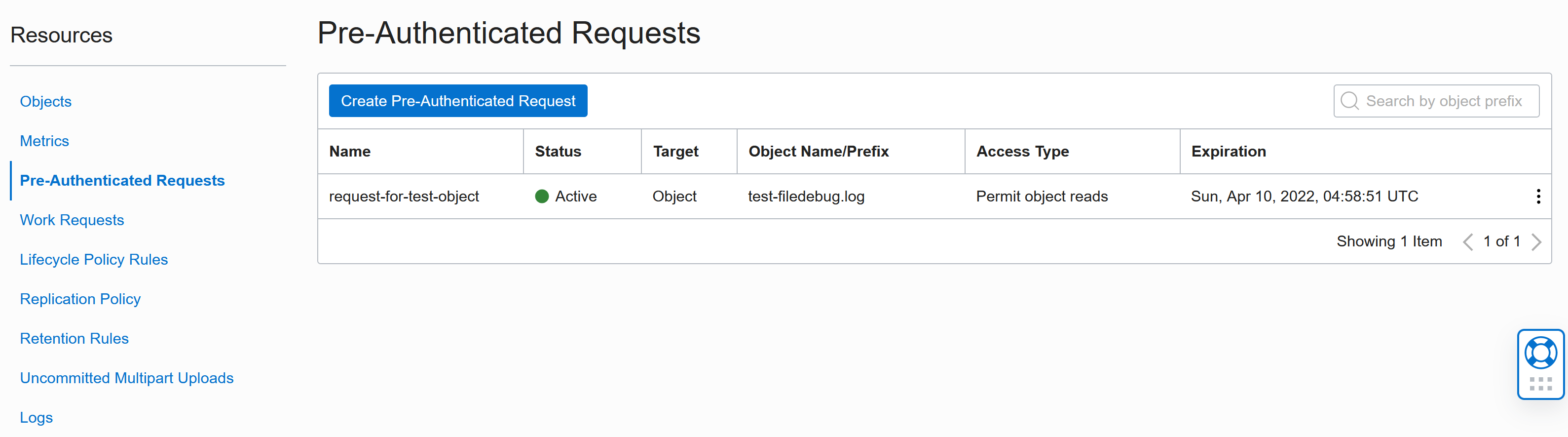

The URL will stop working when it expires or when you delete the request. You can find all requests under Pre-Authenticated Requests in the Resources menu:
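A read-only request for a single object can also be created with the OCI CLI. This is a rough sketch, assuming a configured OCI CLI; `create_read_par` is a hypothetical helper name and all arguments are placeholders. The expiry is passed as an RFC 3339 timestamp.

```shell
# Sketch: create a read-only Pre-Authenticated Request for one object.
# create_read_par is a hypothetical helper around the OCI CLI.
create_read_par() {
  # $1 = bucket name, $2 = object name,
  # $3 = name for the request, $4 = expiry timestamp (RFC 3339)
  oci os preauth-request create \
    --bucket-name "$1" \
    --object-name "$2" \
    --name "$3" \
    --access-type ObjectRead \
    --time-expires "$4"
}

# Usage (placeholder values):
# create_read_par "my-bucket" "hello.txt" "share-hello" "2030-01-01T00:00:00Z"
```

As in the GUI, the access details are only returned once, so save the command's output.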

Tiering

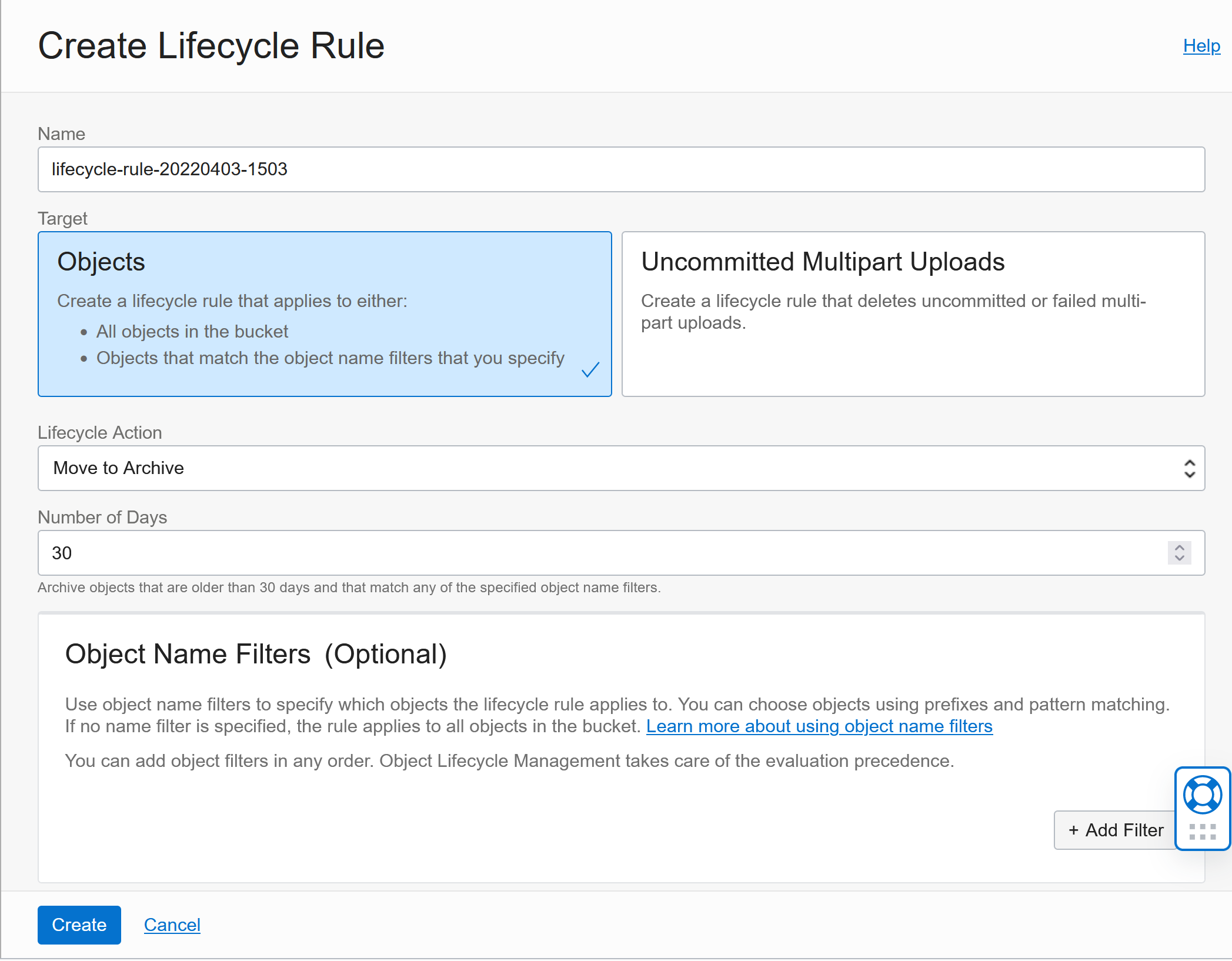

Lifecycle Rules allow you to control what happens with files after a certain amount of time. You can delete them or move them to Archival storage for example:

Mirroring

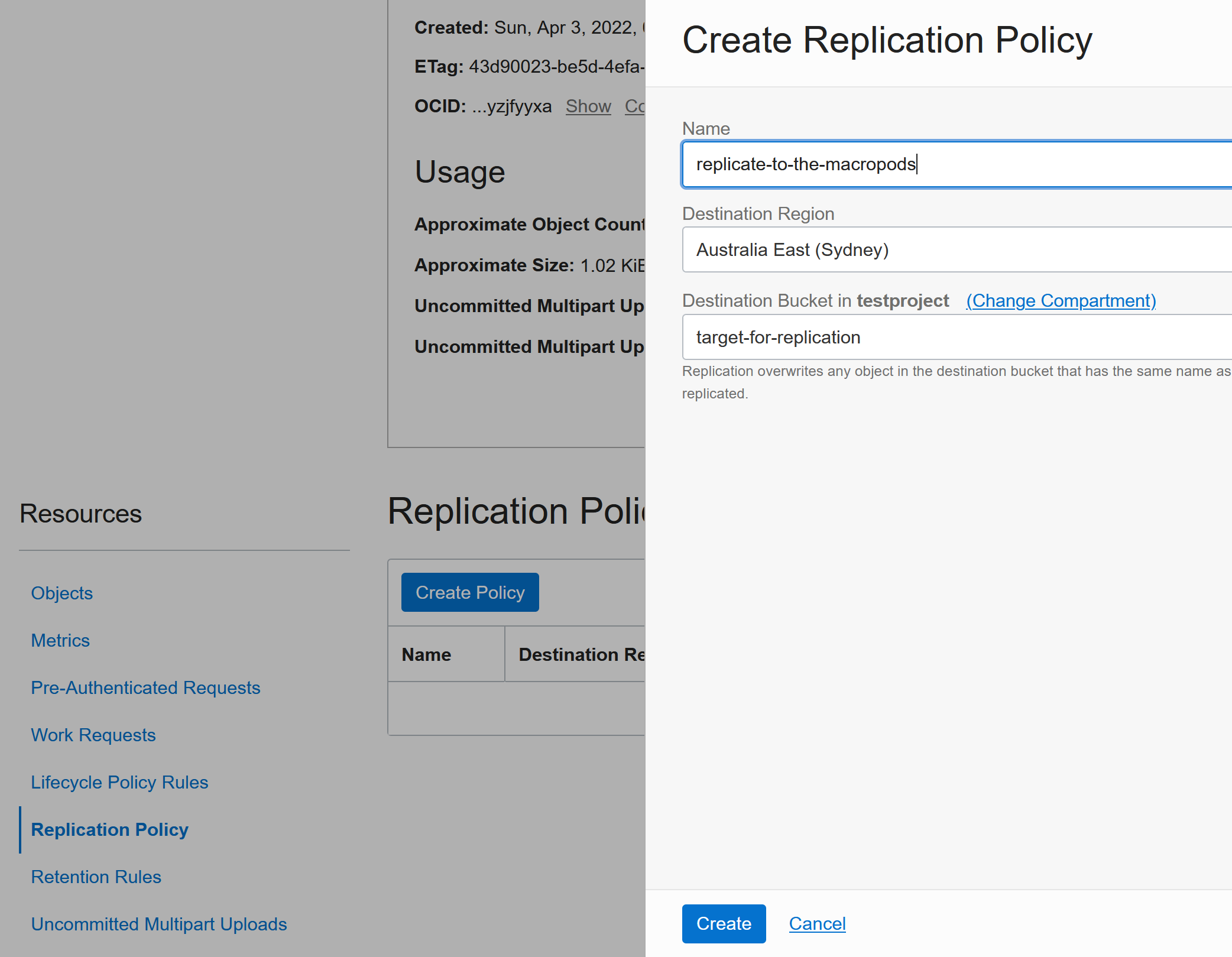

Mirroring allows you to keep the bucket up-to-date with another bucket in another region (e.g. main bucket is in Europe and the replica is in Australia). This is controlled under the Replication Policy.

You first need to create the target bucket in the other region and then you can configure it as a target:

Mounting a Bucket inside a VM

First we need to install a few things on the VM (assuming Oracle Linux 7 here, since the EPEL package below is the one for release 7):

sudo rpm -Uvh https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm

sudo yum update -y

sudo yum install -y epel-release

sudo yum install -y redhat-lsb-core

sudo yum install -y s3fs-fuse

Create a new bucket as described at the beginning of this page.

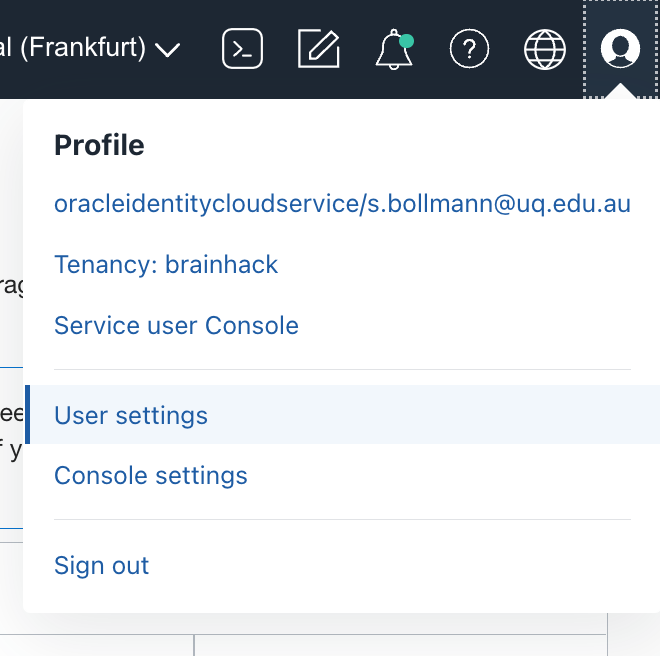

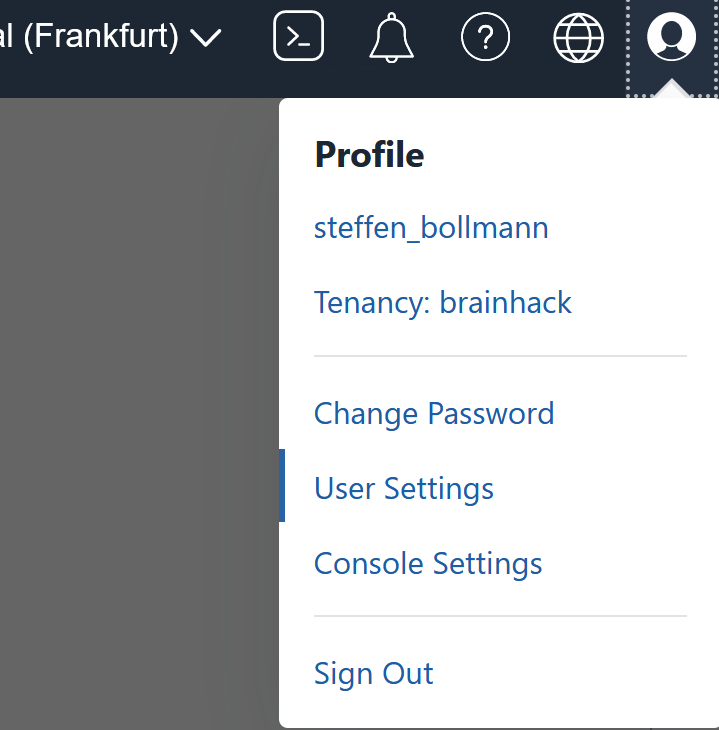

Next we need to create a Customer Secret Key. For this click on the user icon in the top right corner and select User settings.

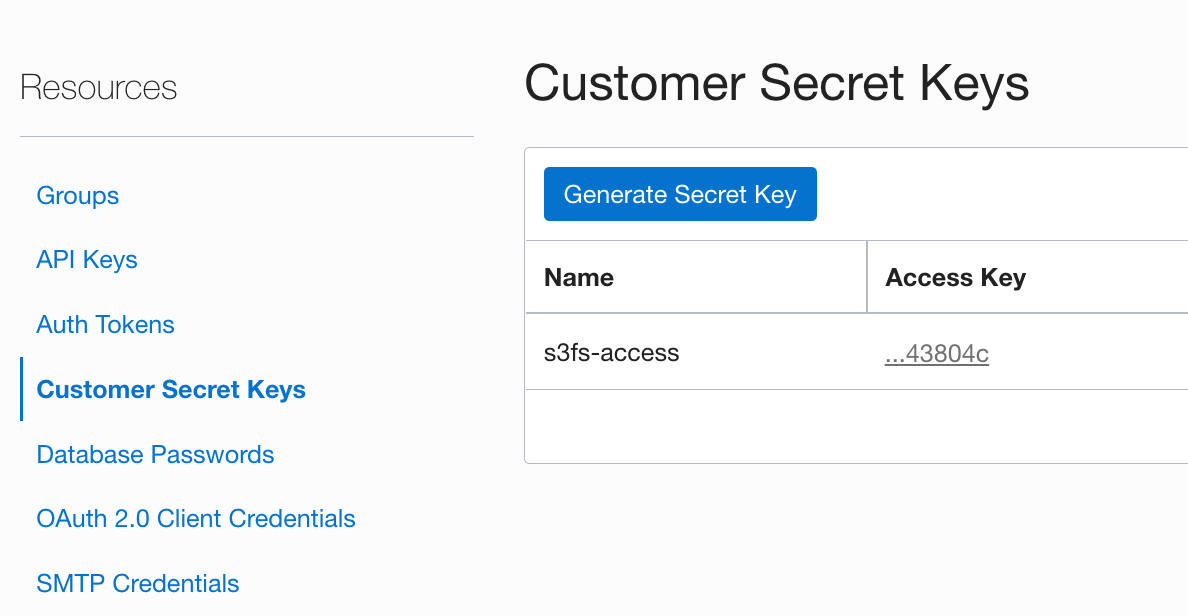

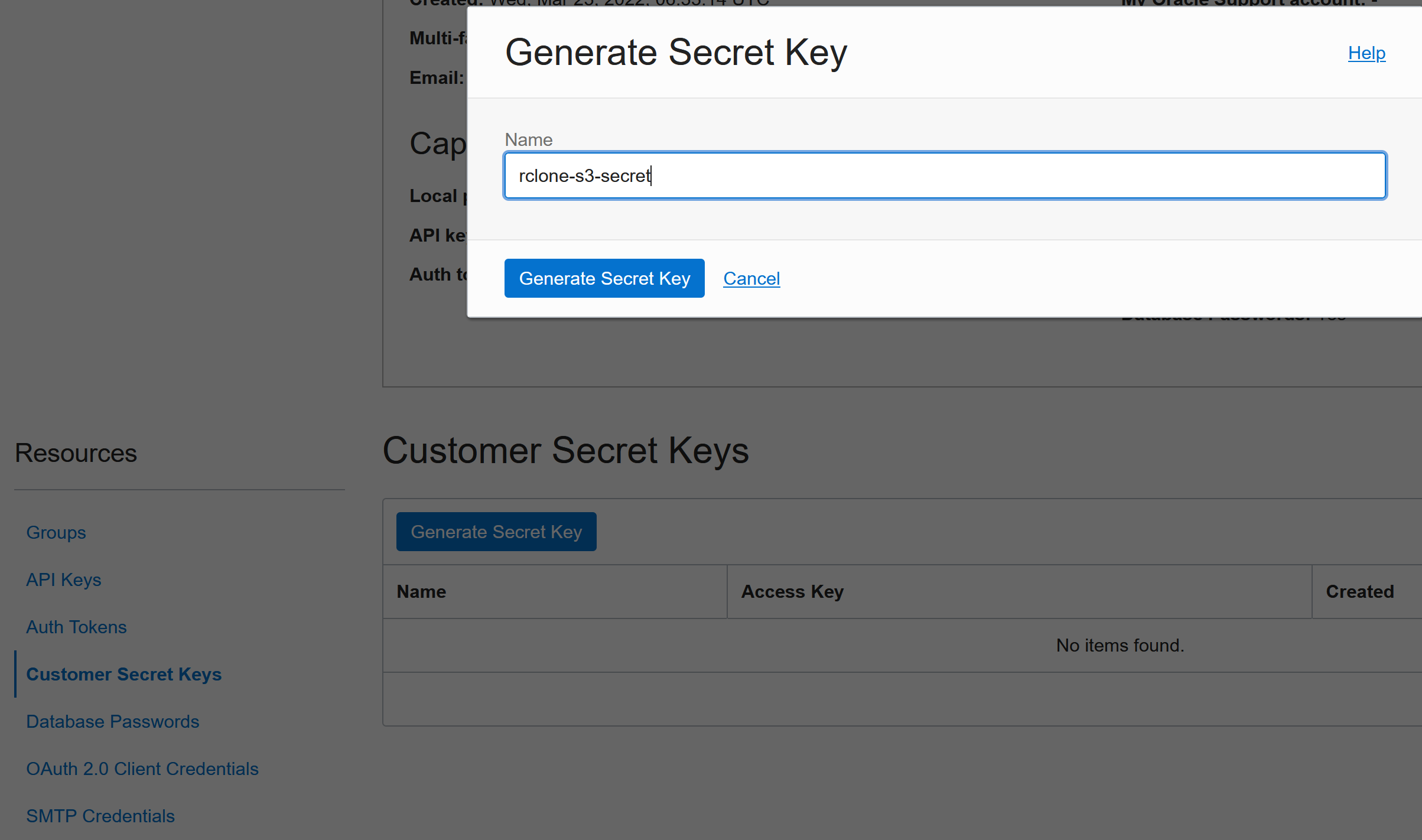

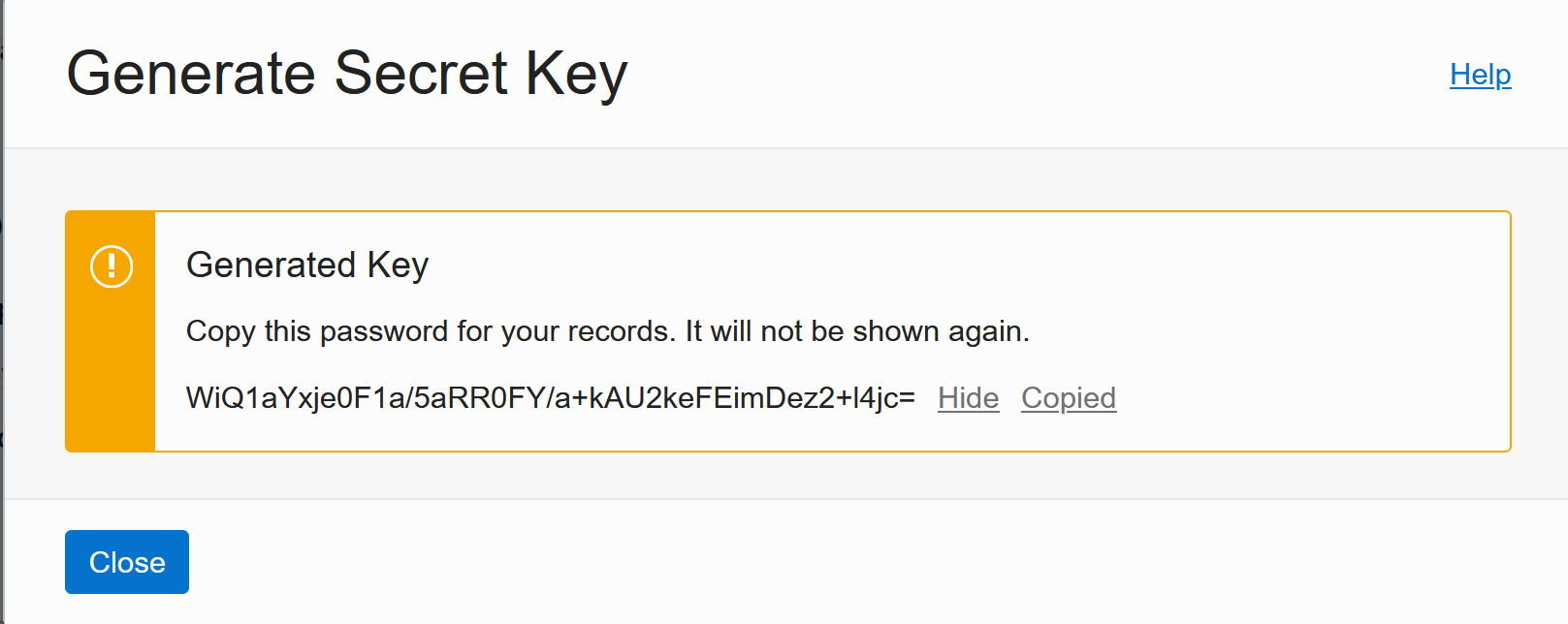

Then, in the Resources menu on the left, select “Customer Secret Keys” and click Generate Secret Key. Give it a name and then copy the secret (it will never be shown again!). Also copy the Access Key shown in the table and keep both in a separate notepad.

Now you need to store these two things inside ~/.passwd-s3fs:

echo FILL_IN_YOUR_ACCESS_KEY_HERE:FILL_IN_YOUR_SECRET_HERE > ${HOME}/.passwd-s3fs
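The `echo` approach creates the file with your default permissions first. As an alternative, here is a small sketch that writes the file so that it is only ever readable by its owner; `write_s3fs_passwd` is a hypothetical helper name and the key values are placeholders.

```shell
# Sketch: write the s3fs credentials file with owner-only permissions.
# write_s3fs_passwd is a hypothetical helper name.
write_s3fs_passwd() {
  # $1 = access key, $2 = secret key, $3 = target file
  (
    umask 077                          # new files get mode 600
    printf '%s:%s\n' "$1" "$2" > "$3"
  ) && chmod 600 "$3"                  # also covers a pre-existing file
}

# Usage (placeholder values):
# write_s3fs_passwd "YOUR_ACCESS_KEY" "YOUR_SECRET" "${HOME}/.passwd-s3fs"
```

Keep in mind that keys passed on the command line can end up in your shell history, just as with `echo`.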

Then restrict the file’s permissions, create the mount point and mount the bucket you created earlier:

chmod 600 ${HOME}/.passwd-s3fs

sudo chmod +x /usr/bin/fusermount

sudo mkdir /data

sudo chmod a+rwx /data

s3fs FILL_IN_YOUR_BUCKET_NAME /data/ -o endpoint=eu-frankfurt-1 -o passwd_file=${HOME}/.passwd-s3fs -o url=https://froi4niecnpv.compat.objectstorage.eu-frankfurt-1.oraclecloud.com/ -o nomultipart -o use_path_request_style

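The bucket is now accessible under /data and you can treat it almost like a volume mount (but it’s not 100% POSIX compliant). Note that froi4niecnpv in the URL is the Object Storage namespace of the tenancy used in this example and eu-frankfurt-1 is its region; replace both with your own values.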
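The mount above does not survive a reboot. One way to make it permanent is an /etc/fstab entry of type `fuse.s3fs`. The sketch below only prints such a line so you can review it before adding it; `s3fs_fstab_line` is a hypothetical helper name, all arguments are placeholders, and the `_netdev` and `allow_other` options are additions that were not part of the manual mount command.

```shell
# Sketch: print an /etc/fstab line for mounting a bucket with s3fs.
# s3fs_fstab_line is a hypothetical helper. _netdev and allow_other are
# assumptions added for boot-time mounting; the other options mirror
# the manual s3fs command.
s3fs_fstab_line() {
  # $1 = bucket, $2 = mount point, $3 = namespace, $4 = region,
  # $5 = absolute path to the credentials file
  printf '%s %s fuse.s3fs _netdev,allow_other,nomultipart,use_path_request_style,endpoint=%s,passwd_file=%s,url=https://%s.compat.objectstorage.%s.oraclecloud.com/ 0 0\n' \
    "$1" "$2" "$4" "$5" "$3" "$4"
}

# Usage (placeholder values): review the line, then append it to /etc/fstab.
# s3fs_fstab_line YOUR_BUCKET /data YOUR_NAMESPACE eu-frankfurt-1 /home/opc/.passwd-s3fs
```

Use an absolute path for the credentials file here, because the mount at boot time is not performed from your user session.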

Uploading files using CURL

To enable this, create a Pre-Authenticated Request for the bucket that permits object reads and writes and enables object listing:

Then copy the URL, as it will never be shown again:

Now you can use curl to upload files:

curl -v -X PUT --upload-file YOUR_FILE_HERE YOUR_PRE_AUTHENTICATED_REQUEST_URL_HERE
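To upload several files in one go, the same call can be wrapped in a loop. This is a minimal sketch; `par_object_url` and `upload_via_par` are hypothetical helper names, and it assumes a bucket-level Pre-Authenticated Request URL to which the object name is appended.

```shell
# Sketch: upload several files through a bucket-level
# Pre-Authenticated Request URL. Helper names are hypothetical.
par_object_url() {
  # $1 = Pre-Authenticated Request URL, $2 = local file path
  # Strips one trailing slash from the URL, then appends the file name.
  printf '%s/%s\n' "${1%/}" "$(basename "$2")"
}

upload_via_par() {
  # $1 = Pre-Authenticated Request URL, remaining arguments = files
  par_url="$1"
  shift
  for f in "$@"; do
    # --fail makes curl return an error on HTTP errors (e.g. expired URL)
    curl --fail --silent --show-error \
      --upload-file "$f" "$(par_object_url "$par_url" "$f")" || return 1
  done
}

# Usage (placeholder values):
# upload_via_par "YOUR_PRE_AUTHENTICATED_REQUEST_URL_HERE" a.txt b.txt
```

The loop stops at the first failed upload, so a typo in the URL does not silently skip files.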

Uploading files using RCLONE

Rclone is a great tool for managing remote file storage. To link it up with Oracle’s Object Storage you need to configure a few things (the full version is here: https://blogs.oracle.com/linux/post/using-rclone-to-copy-data-in-and-out-of-oracle-cloud-object-storage):

- The Amazon S3 Compatibility API relies on a signing key called a Customer Secret Key. You need to create this in your User’s settings:

Then click on Generate Secret Key under Customer Secret Keys:

Save the Secret Key for later:

Then save the Access Key from the table as well.

- Find out where your rclone config file is located:

rclone config file

- Add this to your rclone config file:

[myobjectstorage]

type = s3

provider = Other

env_auth = false

access_key_id = <ACCESS KEY>

secret_access_key = <SECRET KEY>

endpoint = froi4niecnpv.compat.objectstorage.<REGION>.oraclecloud.com

Replace ACCESS KEY and SECRET KEY with the ones generated earlier. Replace REGION with the region where the storage bucket is located (e.g. eu-frankfurt-1). Also replace froi4niecnpv with the Object Storage namespace of your own tenancy.
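If you prefer not to keep the keys in a config file (for example in a CI job), rclone can also read a remote's settings from environment variables named `RCLONE_CONFIG_<REMOTE>_<OPTION>`. The sketch below sets the same values as the config section; `configure_rclone_env` is a hypothetical helper name and all arguments are placeholders.

```shell
# Sketch: define the "myobjectstorage" remote via environment variables
# instead of the rclone config file. configure_rclone_env is a
# hypothetical helper name.
configure_rclone_env() {
  # $1 = access key, $2 = secret key, $3 = namespace, $4 = region
  export RCLONE_CONFIG_MYOBJECTSTORAGE_TYPE=s3
  export RCLONE_CONFIG_MYOBJECTSTORAGE_PROVIDER=Other
  export RCLONE_CONFIG_MYOBJECTSTORAGE_ENV_AUTH=false
  export RCLONE_CONFIG_MYOBJECTSTORAGE_ACCESS_KEY_ID="$1"
  export RCLONE_CONFIG_MYOBJECTSTORAGE_SECRET_ACCESS_KEY="$2"
  export RCLONE_CONFIG_MYOBJECTSTORAGE_ENDPOINT="$3.compat.objectstorage.$4.oraclecloud.com"
}

# Usage (placeholder values):
# configure_rclone_env "YOUR_ACCESS_KEY" "YOUR_SECRET" "YOUR_NAMESPACE" "eu-frankfurt-1"
```

The variables only apply to the current shell session and the processes started from it.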

Now you can use rclone to, for example, list the files in the bucket:

rclone ls myobjectstorage:/test-bucket

or you can upload files or whole directories (or download by reversing the order of Target/Source):

rclone copy YOURFILE_or_YOURDIRECTORY myobjectstorage:/test-bucket

or you can sync whole directories or other remote storage locations (includes deletes!):

rclone sync YOURDIRECTORY_OR_YOUR_OTHER_RCLONE_STORAGE myobjectstorage:/test-bucket
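Because sync deletes files on the target that are missing from the source, it can be worth previewing the changes first with rclone's `--dry-run` flag. Here is a small sketch that shows the preview and only syncs after confirmation; `sync_with_preview` is a hypothetical helper name.

```shell
# Sketch: preview an rclone sync with --dry-run and ask before applying.
# sync_with_preview is a hypothetical helper name.
sync_with_preview() {
  # $1 = source, $2 = destination
  rclone sync --dry-run "$1" "$2" || return 1
  printf 'Apply these changes? [y/N] '
  read -r answer
  case "$answer" in
    y|Y) rclone sync "$1" "$2" ;;
    *)   echo "Aborted."; return 1 ;;
  esac
}

# Usage (placeholder values):
# sync_with_preview YOURDIRECTORY myobjectstorage:/test-bucket
```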